AI Policy Weekly #35

China skirts US export controls, Google hires Character AI employees, and US senators scrutinize OpenAI

Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy. Each issue explores three important developments in AI, curated specifically for US AI policy professionals.

Chinese Chip Smuggling Reaches New Heights

In October 2023, the Center for a New American Security (CNAS) published a working paper titled “Preventing AI Chip Smuggling to China,” which assessed the scale of Chinese efforts to skirt US export controls on high-performance AI chips.

At the time, based on limited available information, the authors’ best guess was that “only a relatively small number of controlled AI chips will make it into China in 2023, likely in the hundreds, but plausibly in the low thousands.”

This week, a detailed investigation from The New York Times indicated that China is on track to smuggle far more chips than that in 2024.

For example, one smuggler in Shenzhen told the Times that “companies came to the market ordering 200 or 300 chips from him at a time.”

Strikingly, “a third business owner said he recently shipped a big batch of servers with more than 2,000 of the most advanced chips made by Nvidia.”

This owner smuggled the chips from Hong Kong to mainland China. “As evidence, he showed photos and a message with his supplier arranging the April delivery for $103 million.”

The Times investigation adds to a growing body of evidence that China is smuggling large quantities of export-controlled AI chips.

Indeed, Tim Fist, a coauthor of the 2023 CNAS report, recently said that “I now think our previous estimate of US AI chips smuggled to China was an underestimate.”

“I think the rate right now is more like >100k/year,” said Fist. “If AI capabilities keep growing, we'll look back on this as a massive own goal.”

For reference, Apple used 8,192 high-end AI chips to train a general-purpose language model that powers the company’s newest AI features, and Meta used twice as many chips to build Llama 3.1.

Narrow-purpose AI systems can be far cheaper to train. Researchers at Sony AI and UC Riverside recently trained an advanced image generation model using only 8 AI chips.

Although many export-controlled chips are fueling China’s civilian AI projects, a small number of them may be directly supporting the Chinese military.

As an illustrative example, the Wall Street Journal reported in 2023 that “China’s top nuclear-weapons research institute has bought sophisticated US computer chips at least a dozen times in the past two and a half years,” despite longstanding American export restrictions.

The entity responsible for enforcing chip controls is the Bureau of Industry and Security (BIS) within the Department of Commerce.

Unfortunately, BIS is chronically underfunded. The aforementioned $103 million smuggling shipment alone is worth almost double the entire $57 million BIS budget for export enforcement.

To implement stronger enforcement techniques and bolster American national security, BIS will need more funding.

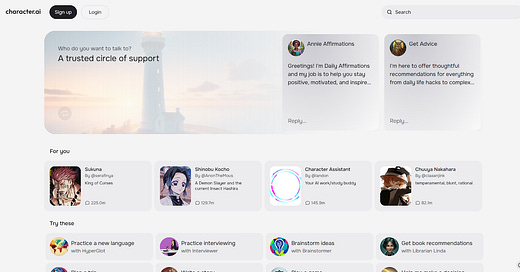

Google Absorbs Character AI Employees

Last summer, smaller AI companies like OpenAI, Anthropic, Mistral, Cohere, Inflection, Adept, and Character all appeared to have a fair chance of building the world’s largest and most capable AI systems.

Now, a year later, large AI companies have effectively acqui-hired three of these prominent AI developers.

To be precise: Microsoft, Amazon, and Google hired many of the AI engineers at Inflection, Adept, and Character, respectively.

The Microsoft-Inflection deal occurred in March, the Amazon-Adept deal occurred in June, and the Google-Character deal occurred just last week.

According to The Information, “Character’s employees working on model training and voice artificial intelligence—about 30 of its roughly 130 staffers—will join Google to work on its Gemini AI efforts.” The departing employees include both the CEO and the president of Character.

Character raised $150 million in early 2023. Since then, it has struggled to raise additional funding. Its $10 monthly premium service has under 100,000 subscribers.

$150 million may sound like plenty, but today’s cutting-edge AI models can cost hundreds of millions of dollars to build.

Further, the cost of frontier AI development is likely to grow exponentially over the coming years.

As a result, wealthy companies like Google, which earned over $80 billion in revenue last quarter (more than Slovenia’s annual GDP), have a significant advantage over smaller AI developers.

Big AI projects are becoming costly endeavors that only Big Tech can afford.

Senators Scrutinize OpenAI’s Whistleblower Policies

Earlier this month, Senator Chuck Grassley (R-IA) sent a letter to OpenAI asking for information about the company’s whistleblower protections.

The letter references a recent complaint to the Securities and Exchange Commission (SEC) from OpenAI employees, who allege that OpenAI violated SEC whistleblower protections with restrictive employment, severance, non-disparagement, and nondisclosure agreements.

Senator Grassley expressed concern about these practices. He also cited employee reports that OpenAI rushed through safety testing protocols before the launch of GPT-4o.

OpenAI has until August 15th to answer Senator Grassley’s questions, including a request for copies of updated exit agreements. Given that the senator’s staff separately requested records on July 19th and July 29th, OpenAI appears to be reluctant to share these documents.

Senator Grassley’s letter comes shortly after Senators Brian Schatz (D-HI), Ben Ray Luján (D-NM), Peter Welch (D-VT), Mark Warner (D-VA), and Angus King (I-ME) sent a separate letter asking about OpenAI’s safety promises, whistleblower protections, security practices, and more.

OpenAI has responded to this earlier information request.

“OpenAI will not enforce any non-disparagement agreements for current and former employees, except in cases of a mutual non-disparagement agreement,” wrote Jason Kwon, OpenAI’s Chief Strategy Officer.

According to the response, OpenAI recently created a 24/7 internal “Integrity Line” for employees who wish to anonymously “raise concerns, including about AI safety and/or violations of applicable law.”

These reformed policies are beneficial, but they are also voluntary, opaque, and reversible.

To solidify safety culture at cutting-edge AI companies, the Center for AI Policy believes that basic whistleblower protections should be made mandatory.

Job Openings

The Center for AI Policy (CAIP) is hiring for three new roles. We’re looking for:

an entry-level Policy Analyst with demonstrated interest in thinking and writing about the alignment problem in artificial intelligence,

a passionate and effective National Field Coordinator who can build grassroots political support for AI safety legislation, and

a Director of Development who can lay the groundwork for financial sustainability for the organization in years to come.

News at CAIP

Episode 10 of the CAIP Podcast features Stephen Casper, a computer science PhD student at MIT researching technical and sociotechnical AI safety. Jakub and Cas discuss AI interpretability, red-teaming and robustness, evaluations and audits, RLHF, Goodhart’s law, and more. Tune in here.

Mark Reddish wrote a blog post titled “AI Voice Tools Enter a New Era of Risk.”

ICYMI: We hosted a webinar titled “Autonomous Weapons and Human Control: Shaping AI Policy for a Secure Future,” featuring Professor Stuart Russell and Mark Beall.

Quote of the Week

A.I. Al Michaels sounds pretty much like Al Michaels.

To my ears, the A.I.-generated Al sounds a bit flatter than real Al, like soda that’s lost some fizz. I’ve also noticed an occasional odd pronunciation or an inhumanly short pause between sentences. Mostly, though, I forgot this was an A.I. doppelgänger.

—Shira Ovide, a strategy editor at The Washington Post, writing about NBC’s Olympic highlight reels, which use an artificial voice clone of American sports commentator Al Michaels

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music recommendations to pass along, please drop me a note at jakub@aipolicy.us.

—Jakub