Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy. Each issue explores three important developments in AI, curated specifically for AI policy professionals.

Anthropic Competes at the Frontier

Anthropic is one of the world's leading AI companies. In 2023, it quickly grew from 85 employees to roughly 300, while raising billions of dollars from Amazon and Google.

This week, Anthropic released its most powerful AI system to date, Claude 3 Opus. The release solidifies Anthropic's position among the AI elite: together with Google and OpenAI, it forms a trio of companies that can credibly claim to offer the planet’s most generally capable AI systems.

Identifying an obvious winner among the AI systems offered by Anthropic, Google, and OpenAI—namely, Claude 3 Opus, Gemini 1.5 Pro, and GPT-4—is difficult, given the systems’ comparable scores across a range of assessments. An additional complication is that OpenAI has not released official test results for its latest update to GPT-4, GPT-4 Turbo.

Nonetheless, Claude 3 Opus appears to have a small but consistent edge, and there are some tests where Claude 3 Opus impressively outperforms its competitors, such as the Graduate-Level Google-Proof Q&A (GPQA) Diamond Benchmark.

GPQA Diamond is a particularly challenging test, consisting of questions written by PhD students and graduates in biology, physics, and chemistry. These questions are designed to stump PhDs from unrelated fields, even with unlimited time and full access to the internet to research the answers (hence the term “Google-proof”).

The original GPT-4 model gets 35.7% of the GPQA Diamond questions correct, whereas Claude 3 Opus gets 50.4% correct, a gap of 14.7 percentage points. This sizable gap indicates that Claude 3 Opus may very well be the best chatbot on the market.

Besides testing for overall competence, Anthropic also tested for dangerous capabilities that could trigger more stringent safety and security protocols, according to the company’s fledgling framework for responding to catastrophic threats from anticipated future AI systems.

For instance, Anthropic tested whether Claude could download an openly available AI model and modify the model to exhibit harmful behaviors in response to a particular prompt. Claude was able to partially complete this task, but Claude failed to debug code for applying modifications using multiple AI chips simultaneously.

Anthropic also tested dangerous capabilities related to biological weapons and cyber attacks, and Claude struggled at these as well. Overall, Claude 3 Opus poses risks akin to those of existing large-scale AI systems like GPT-4.

Although Claude 3 Opus appears incapable of wreaking havoc, Anthropic’s CEO is concerned that future AI systems could pose grave threats. In a podcast interview last year, he gave a 10 to 25 percent chance that “something goes really quite catastrophically wrong on the scale of human civilization.”

Indeed, many Anthropic employees believe AI systems could cause terrible outcomes once these systems exceed human-level intelligence. Therein lies the paradox of Anthropic: the company is focused on developing safe, cutting-edge AI systems, while grappling with the unsettling possibility that even its best efforts may fail to prevent catastrophes from the systems it is working towards.

The Scarcity of “Safe Harbors” for Studying AI Flaws

The last few months in AI have been an open season for open letters, with people rallying together to call for enacting deepfake legislation, using AI for good, and addressing existential threats.

The latest open letter highlights unnecessary barriers that prevent AI researchers from making progress towards safer and more trustworthy AI.

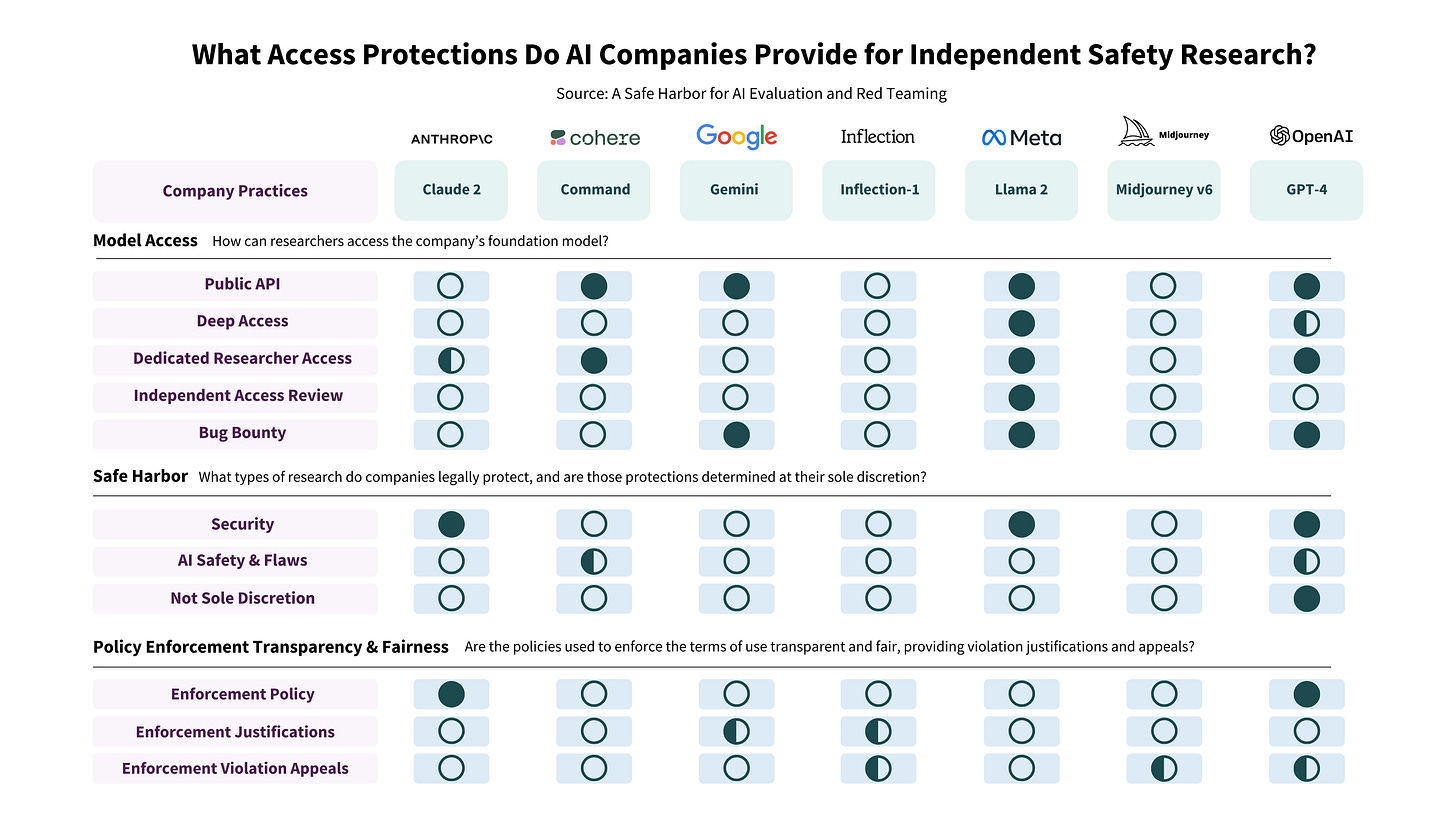

More specifically, many people agree on the importance of independently evaluating AI systems for risks. But researchers who wish to conduct such evaluations may face threats of account suspensions or even legal penalties from AI companies.

The letter calls for basic protections from companies that will allow researchers to effectively search for vulnerabilities in AI models. For instance, companies could commit to treating AI safety research as “authorized” under the Computer Fraud and Abuse Act. Additionally, companies could offer a transparent process for appealing account suspensions.

AI firms could go further still. OpenAI, Google, and Meta already maintain bug bounty programs that explicitly reward researchers for good faith efforts to expose conventional cybersecurity vulnerabilities. AI companies could establish similar programs to financially incentivize the discovery of flaws in AI models.

Senators Aim to Advance International AI Standards

Senators Marsha Blackburn (R-TN) and Mark Warner (D-VA) have introduced the Promoting US Leadership in Standards Act of 2024 (press release, one-pager).

The bill would direct the National Institute of Standards and Technology (NIST) to establish a web portal that informs US stakeholders about ongoing efforts to develop technical standards for AI and other critical and emerging technologies (CETs). The portal would also highlight opportunities to participate in these standards-setting efforts.

Additionally, NIST would build a pilot program to award grants for entities hosting meetings to coordinate standards on AI and other CETs. This program would distribute millions of dollars in grants, since the bill would authorize $10M for NIST to carry out the bill’s assignments.

The Center for AI Policy supports this bill. AI’s global risks necessitate global coordination, and international standards are a critical part of that project.

News at CAIP

We released a memo explaining our perspective on the Musk vs Altman lawsuit and our expectations for tonight’s State of the Union Address. Check it out here.

Quote of the Week

You will not have a choice. Your adversaries are going to choose for you that you have to do this. [...]

We’re already on the way of machines will be fighting machines, robots will be fighting robots. It’s already happening.

—Lt. General Chris Donahue, commander of the 18th Airborne Corps, discussing the adoption of AI in warfighting

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music recommendations to pass along, please drop me a note at jakub@aipolicy.us.

—Jakub