Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy. Each issue explores three important developments in AI, curated specifically for AI policy professionals.

NVIDIA Expands the Hardware Frontier

Imagine if you had heard the following statements just two years ago, back in March 2022.

“What you’re about to enjoy is the world’s first concert where everything is homemade.”

“The ChatGPT moment for robotics may be right around the corner.”

“This is Diana; she is a digital human NIM. And you just talk to her, and she is connected in this case to Hippocratic AI’s large language model for healthcare. And it’s truly amazing. She is just super smart about healthcare things.”

In March 2022, unless you were paying close attention to AI, you might have suspected that the speaker was a crank, or an actor in a sci-fi movie.

But these are all real quotes from Nvidia CEO Jensen Huang—lavish leather jacket and all—at his company’s annual GPU Technology Conference (GTC).

Huang unveiled a range of new AI hardware from Nvidia, which is now America’s third-largest company by market capitalization after its value more than tripled over the past year on excitement about AI’s potential.

The essence of Nvidia’s latest product releases can be summarized in another Huang quote: “Nvidia doesn’t build chips. It builds data centers.”

For the uninitiated, a data center is a large, specialized warehouse of computers. Just as a power plant generates and distributes electricity to power homes and businesses, a data center generates and distributes computing resources to operate applications and digital services.

Nvidia's latest innovations center on constructing data centers optimized for developing and deploying AI systems at scale. At a high level, the innovations fall within two categories: tools for linking AI chips together in large quantities, and the AI chips themselves.

Concretely, Nvidia’s plan for building an AI data center “for the new industrial revolution” goes as follows:

1. Use the new “Blackwell” B200 chip, which is 2.25x faster than today’s leading AI chip, the H100 (also from Nvidia).
2. Link two Blackwell chips together with an Nvidia Grace CPU to form a GB200 superchip.
3. Link two superchips together into a tray (also known as a “node” or “server”).
4. Link eighteen trays together into a GB200 NVL72 rack, which holds 72 Blackwell chips in all.
5. Link eight racks together into a supercomputing cluster.
6. Populate a data center with dozens of these clusters.

In total, the data center that Huang outlined at the conference would contain 32,000 Blackwell chips.
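As a rough check on this hierarchy, the short Python sketch below multiplies out the per-level counts given in the steps above. It assumes each level links exactly the number of units stated and nothing else; the constant and function names are hypothetical, chosen only for illustration.

```python
# Per-level counts taken from the steps described above.
CHIPS_PER_SUPERCHIP = 2   # two Blackwell B200 chips per GB200 superchip
SUPERCHIPS_PER_TRAY = 2   # two superchips per tray ("node" or "server")
TRAYS_PER_RACK = 18       # eighteen trays per GB200 NVL72 rack
RACKS_PER_CLUSTER = 8     # eight racks per supercomputing cluster


def chips_per_rack() -> int:
    """Blackwell chips in one rack."""
    return CHIPS_PER_SUPERCHIP * SUPERCHIPS_PER_TRAY * TRAYS_PER_RACK


def chips_per_cluster() -> int:
    """Blackwell chips in one cluster of racks."""
    return chips_per_rack() * RACKS_PER_CLUSTER


def clusters_needed(total_chips: int) -> float:
    """How many clusters it takes to hold a given number of chips."""
    return total_chips / chips_per_cluster()


if __name__ == "__main__":
    print(chips_per_rack())                   # 72
    print(chips_per_cluster())                # 576
    print(round(clusters_needed(32_000), 1))  # 55.6
```

At 576 chips per cluster, housing 32,000 chips would take about 56 clusters, consistent with “dozens.”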

The chips alone could be a billion-dollar investment, since Huang predicts they will retail between $30,000 and $40,000 apiece when they come to market later this year.
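The billion-dollar figure comes from multiplying Huang’s predicted price range by the 32,000-chip count. Here is a minimal sketch, assuming every chip sells at the predicted price with no volume discounts, and ignoring networking, power, cooling, and buildings; the names are illustrative.

```python
CHIP_COUNT = 32_000      # chips in the data center Huang outlined
PRICE_LOW_USD = 30_000   # low end of Huang's predicted retail price
PRICE_HIGH_USD = 40_000  # high end of Huang's predicted retail price


def chip_cost_range(count: int, low: int, high: int) -> tuple[int, int]:
    """Total chip spend at the low and high ends of the price range."""
    return count * low, count * high


low, high = chip_cost_range(CHIP_COUNT, PRICE_LOW_USD, PRICE_HIGH_USD)
print(f"${low / 1e9:.2f}B to ${high / 1e9:.2f}B")  # $0.96B to $1.28B
```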

However, leading AI developers may be willing to pay that price for gains in efficiency and speed. At the conference, Huang explained that training a 1.8-trillion-parameter “GPT model” (likely OpenAI’s GPT-4) had required 25,000 of Nvidia’s older A100 chips running for three to five months, whereas the same training run could be done with only 2,000 Blackwell chips in three months.
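One crude way to read Huang’s comparison is in “chip-months,” meaning the number of chips multiplied by the months they spend training. The sketch below assumes that proxy, which ignores differences in per-chip speed, power draw, and utilization, so treat the resulting ratio as illustrative only.

```python
def chip_months(chips: int, months: float) -> float:
    """Crude compute proxy: chips multiplied by months of training."""
    return chips * months


a100_low = chip_months(25_000, 3)   # 75,000 chip-months (three-month case)
a100_high = chip_months(25_000, 5)  # 125,000 chip-months (five-month case)
blackwell = chip_months(2_000, 3)   # 6,000 chip-months

print(a100_low / blackwell, round(a100_high / blackwell, 1))  # 12.5 20.8
```

By this rough measure, the Blackwell run uses about 12.5 to 21 times fewer chip-months than the A100 run.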

Biggest CHIPS Act Investment Yet: Intel

Before this week, the US Commerce Department had used less than $2B of the $39B in manufacturing subsidies that Congress directed it to invest under the CHIPS Act. Most of this spending was a $1.5B award to GlobalFoundries for trailing-edge chips.

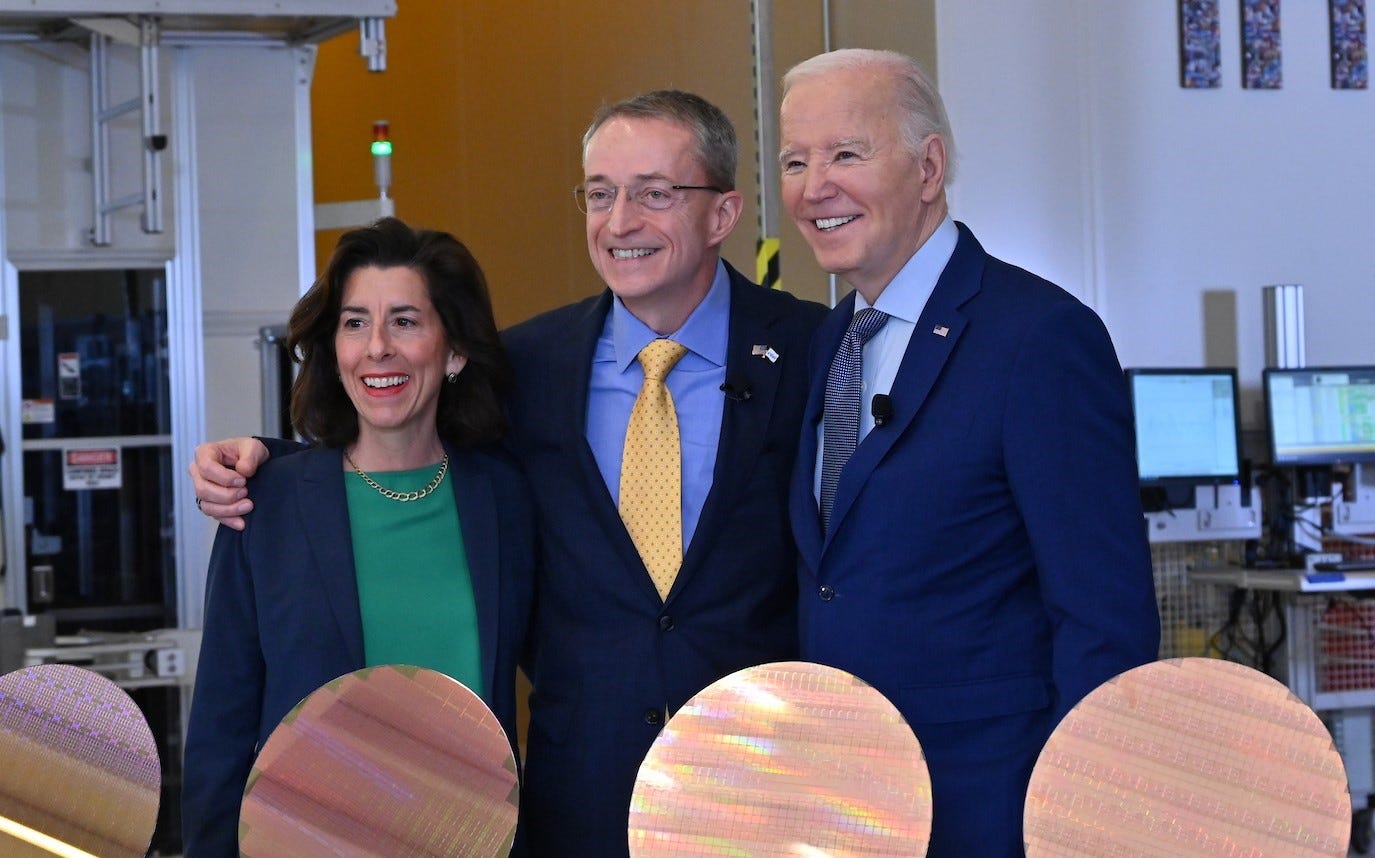

On Wednesday, the Commerce Department and Intel signed a non-binding preliminary memorandum of terms, proposing up to $8.5B in direct funding for Intel.

As part of the CHIPS deal, Intel also expects to receive “a U.S. Treasury Department Investment Tax Credit (ITC) of up to 25% on more than $100 billion in qualified investments and eligibility for federal loans up to $11 billion.”
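To put the package in dollar terms, the sketch below applies the 25% credit to the $100 billion baseline and adds the proposed direct funding. All of these are ceilings rather than commitments: the actual credit scales with Intel’s qualified investment (which may exceed $100 billion), and the loans are left out because they must be repaid. The variable names are illustrative.

```python
# Figures from the announcement; all are "up to" amounts.
DIRECT_FUNDING_USD = 8.5e9        # proposed direct funding
ITC_RATE = 0.25                   # maximum investment tax credit rate
QUALIFIED_INVESTMENT_USD = 100e9  # "more than $100 billion"; lower bound used here

# Credit on the $100B baseline; more qualified investment would raise it.
max_itc = ITC_RATE * QUALIFIED_INVESTMENT_USD
combined = DIRECT_FUNDING_USD + max_itc  # federal loans excluded: they must be repaid

print(f"Tax credit at the $100B baseline: ${max_itc / 1e9:.0f}B")  # $25B
print(f"Direct funding plus that credit: ${combined / 1e9:.1f}B")  # $33.5B
```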

This fiscal support would boost Intel's semiconductor manufacturing and research projects in Arizona, New Mexico, Ohio, and Oregon.

For example, Intel has been constructing two new leading-edge chip factories in Chandler, AZ since September 2021, and another pair of leading-edge factories in New Albany, OH since September 2022.

These investments are crucial in the era of AI, since the US currently produces only a small fraction of the world's most cutting-edge AI chips. (Nvidia is “fabless,” meaning it outsources the physical fabrication of its chips.)

New Bill Seeks to Protect Consumer Data From AI Training

The AI Consumer Opt-In, Notification Standards, and Ethical Norms for Training (AI CONSENT) Act, introduced by Senators Welch (D-VT) and Luján (D-NM), would require companies to disclose when consumers’ data will be used for AI training and to obtain consumers’ express opt-in consent before proceeding.

The bill directs the FTC to set clear disclosure standards and guidelines for obtaining this permission. The process must be easy for consumers to use, must let them freely grant or revoke permission, and must not affect their access to a company’s services.
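The bill’s core requirements (no training use without express opt-in, free granting and revocation, and no effect on service access) can be illustrated with a toy consent record. This is a hypothetical sketch: the class and function names are invented for illustration, and the FTC, not this code, would define the actual disclosure and consent standards.

```python
from dataclasses import dataclass, field


@dataclass
class TrainingConsent:
    """Hypothetical per-user record illustrating an opt-in consent model.

    Not an FTC-specified format; it only mirrors the bill's stated requirements.
    """
    user_id: str
    opted_in: bool = False  # default is no consent until the user expressly opts in
    history: list[str] = field(default_factory=list)  # log of grant/revoke actions

    def grant(self) -> None:
        """User expressly opts in to AI training use of their data."""
        self.opted_in = True
        self.history.append("grant")

    def revoke(self) -> None:
        """User withdraws consent at any time."""
        self.opted_in = False
        self.history.append("revoke")


def may_train_on(record: TrainingConsent) -> bool:
    # Data may be used for AI training only while the user has opted in.
    return record.opted_in


def may_use_service(record: TrainingConsent) -> bool:
    # The consent choice is deliberately ignored here: declining or revoking
    # must not affect the user's access to the company's services.
    return True
```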

Additionally, the AI CONSENT Act would mandate an FTC study to evaluate the feasibility of AI companies anonymizing user data to safeguard privacy.

News at CAIP

Save the date: we’re hosting another AI policy happy hour at Sonoma Restaurant & Wine Bar, from 5:30–7:30pm on Thursday, April 4th. Anyone working on AI policy or related topics is welcome to join.

Thomas Larsen coauthored a new research paper, which analyzes structured arguments that AI developers could make to demonstrate that their AI systems are safe.

Our latest blog post, led by Kate Forscey, compares AI to social media as a policy issue, highlights the risk of human enfeeblement from AI, and argues that Congress should act now to prevent societal-scale harms.

We launched the Center for AI Policy Podcast, which zooms into the strategic landscape of AI and unpacks its implications for US policy. Stay tuned for more episodes this Saturday.

Quote of the Week

We turned over exactly the stones that you’re turning over right now, and we think you will end up exactly where we are—not because we are smarter than everyone else but simply because we started earlier than everyone else.

—Dragoș Tudorache, Chair of the European Parliament’s Special Committee on AI, commenting on the US potentially following in Europe’s footsteps on AI regulation

This edition was authored by Jakub Kraus.

If you have feedback, a story to suggest, or music recommendations to share, please drop me a note at jakub@aipolicy.us.

—Jakub