AI Policy Weekly #18

Billion-dollar investments in global computing power, a bicameral data privacy bill, and a framework for sharing critical AI information

Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy. Each issue explores three important developments in AI, curated specifically for AI policy professionals.

Companies and Nations Pour Billions into AI and Chips

In AI, one week can contain several multi-billion-dollar investments.

Canadian Prime Minister Justin Trudeau's pledge to spend $1.8B USD ($2.4B CAD) on AI technology may have significant ramifications for AI safety.

Most of the money would strengthen computing resources to enable further AI development, but $50M CAD ($37M USD) would go towards creating a new Canadian AI Safety Institute. That’s 3.7 times what the United States invested in its own AI safety institute this year.

In the US, the Commerce Department signed a nonbinding agreement to send $6.6B of CHIPS Act funding to the Taiwan Semiconductor Manufacturing Company (TSMC), which physically manufactures the top AI chips from Nvidia, AMD, Meta, Intel, and more. TSMC will also get a hefty investment tax credit and up to $5B in loans.

TSMC already had plans to build two manufacturing facilities in Phoenix, and it has now announced plans to build a third, spicing up the CHIPS funding news. What’s notable about these factories is that they will produce some of the most advanced chips currently possible.

However, the TSMC factories will take some time to come online: the first plant will begin large-scale production in 2025, the second will start in 2028 (if all goes well), and the third will start “by the end of the decade.”

Other countries are making their own investments to lead in AI and cutting-edge chips. One such country is South Korea, where President Yoon Suk Yeol declared plans to invest ₩9.4T ($6.9B) in AI and AI semiconductors by 2027, plus another ₩1.4T ($1B) specifically dedicated to growing “innovative AI semiconductor companies.” In AI hardware, South Korea already dominates in High-Bandwidth Memory (HBM) thanks to leading companies Samsung and SK hynix.

Further, SK hynix announced its own $3.87B AI investment last week: West Lafayette, Indiana, will welcome a new packaging facility from the company.

“SK hynix will soon be a household name in Indiana,” commented US Senator Todd Young (R-IN).

In case Canada, the US, and South Korea weren’t enough, Microsoft unveiled plans to expand in Japan with a $2.9B investment over two years to support AI, cloud computing, data centers, and more.

With billion-dollar investments announced weekly, the AI landscape is evolving at a rapid pace.

Comprehensive Data Privacy Proposal Enters Congress

“They're very similar. But helpful to remember that humans share 98% of our DNA with chimps.”

That’s how privacy legislation expert Keir Lamont compared the American Privacy Rights Act (APRA) to its predecessor, the American Data Privacy and Protection Act (ADPPA), which failed to pass last Congress.

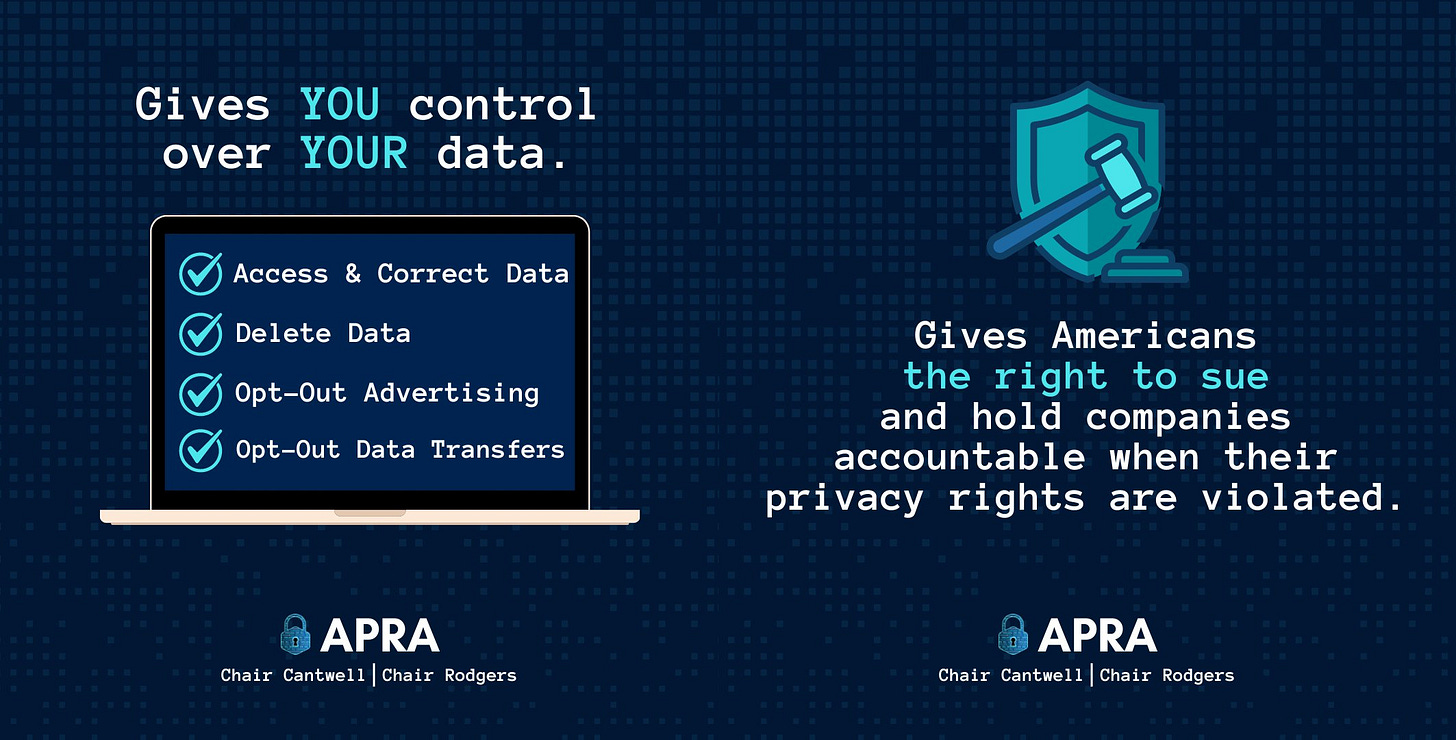

The APRA comes from a bicameral, bipartisan pair of Washington policymakers chairing the US Senate and House Commerce committees: Senator Maria Cantwell (D-WA) and Representative Cathy McMorris Rodgers (R-WA). It also received some support from Microsoft.

Some of APRA’s changes from ADPPA include stronger private rights of action, a pilot program promoting privacy-enhancing technology, and consumer rights to opt out of certain practices.

A few aspects of the text stand out for their effects on the AI industry.

First, the bill would make it harder for AI companies to collect user data for training new AI systems. For example, users would have the right to delete data that identifies them and to opt out of companies transferring that data to other parties.

Second, the data security requirements, which include routine assessments of vulnerabilities, could encourage better cybersecurity practices in general at AI companies, including for securing model weights.

Third, large data holders would need to conduct annual impact assessments of algorithms posing a consequential risk of harm. This would affect major social media platforms, which often use AI algorithms to curate content.

Although APRA is still a discussion draft, the House has already scheduled a hearing for next Wednesday to examine it in greater detail, along with other data privacy proposals like the Kids Online Safety Act (KOSA).

Researchers Recommend Information Sharing to Promote Safer AI

Researchers from Google DeepMind, GovAI, the University of Toronto, and elsewhere collaborated to publish a new paper that explores promising information exchange practices to reduce risks of cutting-edge AI.

One reason these practices are necessary is that AI is evolving rapidly, and up-to-date information can spell the difference between effective and ineffective government oversight.

Specifically, AI companies could share information such as the architecture, computational resources, and data used in training with government actors. AI companies could also share risk assessment results, dangerous capability evaluations, and technical safeguards with other AI developers and independent domain experts.

Even more specifically, the paper outlines what an information sharing ecosystem might look like for AI risks related to cyber attacks and biological weapons.

Finally, the researchers outline promising practices for smoothing the implementation of these mechanisms, such as establishing safe harbors to protect companies from legal liability following information disclosures, expanding the government’s technical AI talent, and anonymizing certain disclosed information.

One clever idea is that in the absence of enforceable regulations, companies could credibly pre-commit to information sharing by making an upfront payment that they incrementally recoup as they follow through on their promise.

News at CAIP

We’re hiring for three different roles: External Affairs Director, Government Relations Director, and Senior Policy Analyst or Economist.

We released our model legislation, the Responsible Advanced Artificial Intelligence Act of 2024 (RAAIA). See our press release, and Politico’s coverage in Morning Tech.

Our new blog post argues that “There’s Nothing Hypothetical About Genius-Level AI.”

The latest episode of the Center for AI Policy Podcast features Jeffrey Ladish, Executive Director of Palisade Research.

From 11am to 12pm on Tuesday, April 23rd, we will host a moderated discussion on AI, Automation, and the Workforce in SVC 212 inside the Capitol Visitor Center. The speakers will be Professor Simon Johnson of MIT and Professor Robin Hanson of GMU. To attend, fill out an RSVP using this form.

Quote of the Week

AI models have hoovered up the entire sum of the human experience that we’ve accomplished over thousands of years. And now, we just hand it off to be their prompt engineers?

—Jon Stewart, host of The Daily Show, in a segment on AI and the future of work

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music recommendations to pass along, please drop me a note at jakub@aipolicy.us.

—Jakub