AI Policy Weekly #32

OpenAI’s rushed testing and whistleblower scrutiny, the DOE AI Act, and the LLM4HWDesign contest

Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy. Each issue explores three important developments in AI, curated specifically for US AI policy professionals.

OpenAI Rushes Safety Testing and Faces Whistleblower Allegations

Microsoft CEO Satya Nadella recently told the New York Times that one of his favorite qualities about OpenAI CEO Sam Altman is that “every day he’s calling me and saying ‘I need more, I need more, I need more.’”

Based on recent reporting from the Washington Post, one of these daily needs may be to release products faster. Ahead of the May launch of GPT-4o, “some members of OpenAI’s safety team felt pressured to speed through a new testing protocol, designed to prevent the technology from causing catastrophic harm.”

The testers had one week to conduct all their evaluations, which was barely enough time to finish.

OpenAI spokesperson Lindsey Held said “[we] didn’t cut corners on our safety process, though we recognize the launch was stressful for our teams.”

“The process was intense,” said a member of OpenAI’s preparedness team for catastrophic risks. “After that, we said, ‘Let’s not do it again.’”

Last year, OpenAI made voluntary commitments to the White House to test its models for dangerous capabilities such as cyberattacks, self-replication, and biological misuse. It also made voluntary safety commitments at the AI Seoul Summit this year.

The result of all these voluntary commitments was a single rushed week of safety testing.

This outcome demonstrates the limits of voluntary commitments and the need for safety requirements like those in the Center for AI Policy’s model legislation.

OpenAI separately made news this week for potentially violating SEC whistleblower protections. Several OpenAI whistleblowers filed a formal complaint against OpenAI with the SEC.

The employees also sent a letter to SEC Chair Gary Gensler and Senator Chuck Grassley (R-IA), alleging violations such as:

using non-disparagement clauses that lacked exemptions for disclosing securities violations,

requiring company consent before disclosing confidential information to federal authorities,

using confidentiality clauses in agreements containing securities violations,

requiring employees to “waive compensation that was intended by Congress to incentivize reporting and provide financial relief to whistleblowers.”

“OpenAI’s nondisclosure agreements must change,” said Senator Grassley, co-founder and co-chair of the Senate Whistleblower Protection Caucus, in response to the letter.

“Monitoring and mitigating the threats posed by AI is a part of Congress’s constitutional responsibility to protect our national security, and whistleblowers will be essential to that task.”

The Center for AI Policy strongly agrees with Senator Grassley. That’s why our 2024 AI Action Plan calls for whistleblower protections at AI companies.

Manchin, Murkowski Introduce the DOE AI Act

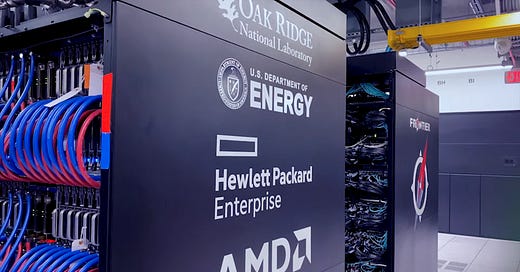

Senators Joe Manchin (I-WV) and Lisa Murkowski (R-AK) recently introduced S.4664, the Department of Energy (DOE) AI Act.

The bill would formally establish the Frontiers in AI for Science, Security, and Technology (FASST) program at the DOE.

FASST would work on datasets, computing platforms, and AI models in order to support the DOE’s mission.

For example, FASST would develop “best-in-class AI foundation models” and research “scientific disciplines needed for frontier AI.” It would also research safety-relevant topics, such as AI alignment to “make AI systems behave in line with human intentions.”

Additionally, the bill would formally establish the Office of Critical and Emerging Technologies at the DOE, with a mission to assess US competitiveness, prepare for “technological surprise,” and support policy decisionmaking on emerging technologies.

Further, the bill would establish at least eight AI R&D Centers at the National Laboratories, with annual authorized funding of at least $30 million per Center.

Overall, many parts of the legislation seek to accelerate AI creation and adoption.

Nonetheless, the bill repeatedly emphasizes the need for AI to be safe, secure, and trustworthy. For instance, it would establish an AI risk and evaluation program targeting AI threats related to deception, autonomy, malware, critical infrastructure, and chemical, biological, radiological, and nuclear (CBRN) hazards.

The Center for AI Policy is pleased to see the DOE AI Act supporting safety research and taking safety seriously. But even if the bill passes, America will still need comprehensive safety requirements for private AI developers.

New Contest Aims to Use AI to Accelerate Hardware Design

“Better GPUs → more intelligence per unit training time → better coding LLMs → design even better GPUs.”

That’s how Jim Fan, Senior Research Manager at NVIDIA, described NVIDIA’s interest in automating the design of computer chips.

“Some day, we can take a vacation and NVIDIA will keep shipping new chips,” said Fan in a post on X.

Fan linked to the new Large Language Model (LLM)-Assisted Hardware Code Generation (LLM4HWDesign) contest, which seeks to “develop an open-source, large-scale, and high-quality dataset” so that generative AI models can further automate hardware development.

The contest is sponsored by NVIDIA and the National Science Foundation.

Participants will collect human-generated and AI-generated code data, filter out low-quality data, and design data descriptions and labels that help LLMs learn to write code for hardware design projects.

This contest reflects the increasing importance of AI in hardware development. Indeed, NVIDIA’s top AI chips already use thousands of AI-designed circuits.

Shankar Krishnamoorthy, general manager of the electronic design automation (EDA) group at Synopsys, recently told the Wall Street Journal that “the ability to autonomously create a functional chip using generative AI” may be just five years away.

The process of AI designing AI hardware is a clear example of AI improving AI, a feedback loop that will become increasingly visible as AI grows more capable. As a result, AI progress could get even faster in the coming years.

News at CAIP

Jason Green-Lowe wrote a blog post responding to OpenAI’s undisclosed security breach.

Claudia Wilson wrote a blog post about whistleblowers’ allegations of SEC violations at OpenAI.

Brian Waldrip wrote a blog post about NATO’s updated AI strategy, which emphasizes the importance of safety and responsibility in AI development.

Claudia Wilson wrote a blog post about the Zambia copper discovery. This is an important example of AI accelerating AI research.

On Wednesday, July 24th from 5:30–7:30 p.m. ET, we are hosting an AI policy happy hour at Sonoma Restaurant & Wine Bar. Anyone working on AI policy or related topics is welcome to attend. RSVP here.

On Monday, July 29th at 3 p.m. ET, the Center for AI Policy is hosting a webinar titled “Autonomous Weapons and Human Control: Shaping AI Policy for a Secure Future.” The event will feature a presentation and audience Q&A with Professor Stuart Russell, a leading researcher in artificial intelligence and the author (with Peter Norvig) of the standard text in the field. RSVP here.

Quote of the Week

Creating highly tailored propaganda is now fast and easy. Russia proved that AI can create realistic-seeming personas, drive content at scale, and trick platforms into believing personas are not bots at all. A group of allies caught this effort and seized the relevant domains, but not until the work had been underway for two years. In another two years, the state of the art in AI will be such that a bot can identify the messages that resonate best with a micropopulation and then feed that population what they want to hear. The payload of information will feel as local and genuine as a conversation over the fence with a neighbor.

—Emily Harding, Director of the Intelligence, National Security, and Technology Program at CSIS, in an article analyzing the Justice Department’s efforts to disrupt an AI-powered Russian bot farm

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music to recommend, please drop me a note at jakub@aipolicy.us.

—Jakub