AI Policy Weekly #45

Transparency policy proposals, Muah data breach, and AI’s new sense of taste

Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy. Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

SB 1047 Supporter and Opponent Find Common Ground: Transparency

California Governor Gavin Newsom recently vetoed SB 1047, a high-profile bill that would have created safety and security requirements for AI models costing over $100 million to train.

“This was America’s first major public conversation about AI policy, but it will not be our last,” write Daniel Kokotajlo and Dean Ball, who respectively supported and opposed the legislation, in a new op-ed in TIME.

Despite their significant divergence on SB 1047, the pair commendably collaborated to identify proposals that they could both support. Their op-ed outlines a framework with four measures for increasing visibility into the development of broadly capable AI systems like ChatGPT.

Their first recommendation is that “when a frontier lab first observes that a novel and major capability (as measured by the lab’s own safety plan) has been reached, the public should be informed.” The U.S. AI Safety Institute could facilitate this process.

At the moment, most AI capabilities become public only after an AI system is announced or released, which can be several months after its creation. Additionally, some capabilities go totally unreported, in part because companies lack comprehensive techniques for confidently evaluating dangerous capabilities in their general-purpose AI systems.

Kokotajlo and Ball’s second recommendation is to have companies share what behaviors they aim to instill in their AI systems, citing documents like Anthropic’s constitution and system prompts and OpenAI’s model spec. Publishing this information would help advance AI alignment research, crowdsource potential improvements to company plans, and give Americans insight into the principles behind increasingly ubiquitous AI systems.

Specifically, the two authors “support regulation, voluntary commitments, or industry standards that create an expectation that documents like these be created and shared with the public.”

Their third recommendation is for frontier AI companies to “publish their safety cases—their explanations of how their models will pursue the intended goals and obey the intended principles or at least their justifications for why their models won’t cause catastrophe.”

The term “safety cases” sparked debate among AI experts on social media, since it has a different meaning in other safety-critical industries like nuclear energy. Regardless of terminology, the general intent of this proposal is that cutting-edge AI companies should be responsible for demonstrating that their systems are safe.

The final recommendation is to protect whistleblowers who report on illegal activities and extreme AI risks. Ball and Kokotajlo suggest using the whistleblower protections from SB 1047 “as the starting point for further discussion.”

The Center for AI Policy strongly supports this call for whistleblower protections, which we previously outlined as a core priority for Congress in our 2024 AI Action Plan.

AI Romance Platform Suffers Major Data Breach

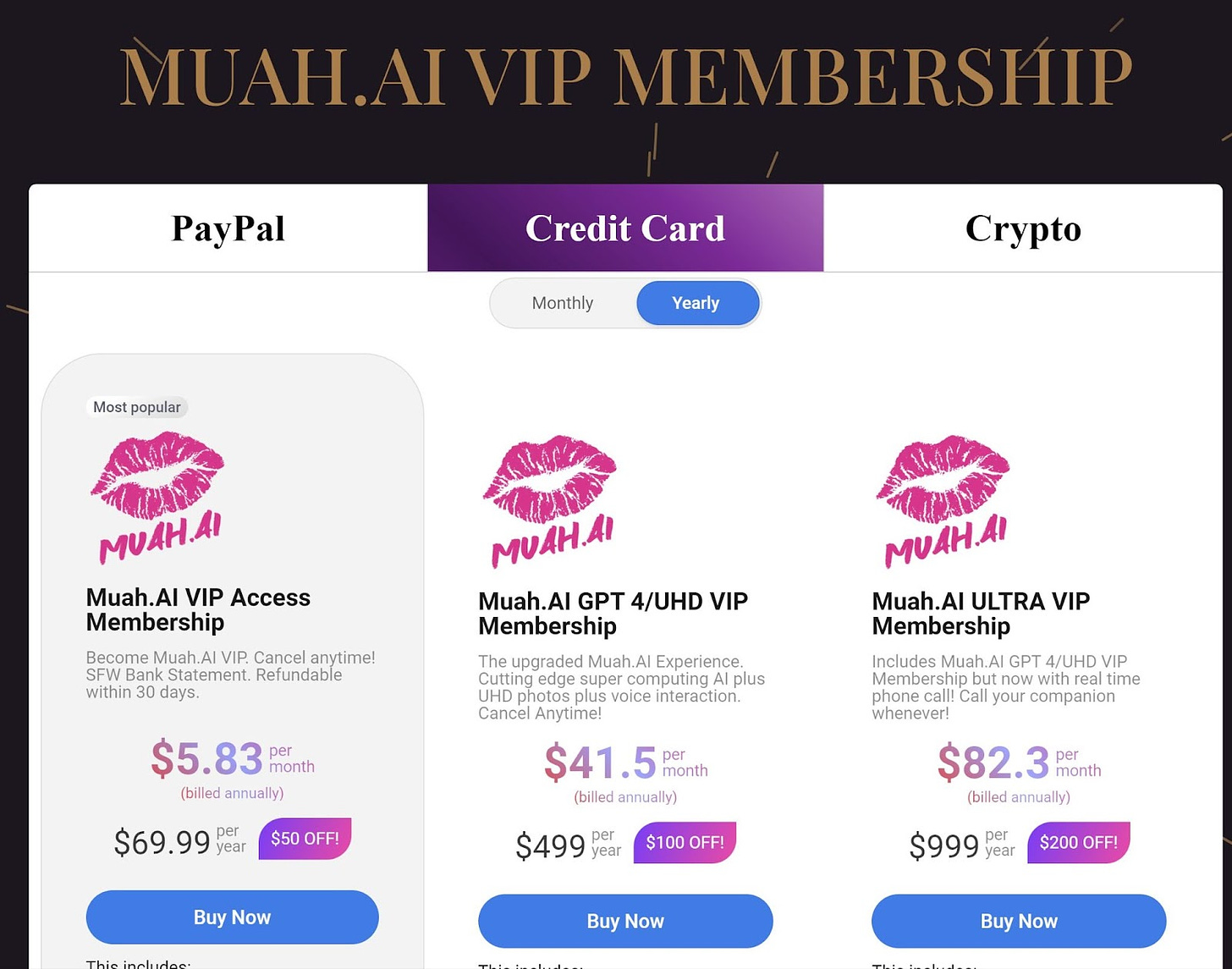

Muah AI, a small AI company selling romantic AI chatbot services, recently experienced a significant data breach, exposing approximately 1.9 million email addresses and associated user prompts.

Security researcher Troy Hunt found that many email addresses appeared to be linked to real identities, potentially exposing users to risks of extortion or reputational damage. For example, a hacker could blackmail a victim of the breach into sharing trade secrets from their employer.

Muah AI claimed the breach was orchestrated by competitors but provided no evidence for this claim. The company also stated that user chats and photos are periodically wiped and that payment details are stored securely.

Weak security like Muah’s is concerningly common in the romantic AI chatbot industry. Mozilla reviewed several such services earlier this year and found that many of them follow inadequate privacy and security practices, such as sharing or selling personal data and allowing weak passwords.

Disturbingly, thousands of accounts appear to have requested child sexual abuse material from Muah’s platform. This abhorrent behavior demonstrates a general principle: when an AI tool is made available to millions of people, some of them will use it in ways that are surprisingly disturbing or antisocial.

In response, Muah AI announced plans to implement an experimental system to automatically suspend users determined to be abusive, though they acknowledged challenges in accurately detecting abuse.

The Muah AI incident highlights serious concerns about data security, user privacy, user behavior, and content moderation in romantic AI chatbot services.

AI Gains a New Sense of Taste

Researchers from Penn State and NASA have published a paper in Nature introducing a new way to detect and measure chemicals using a combination of tiny electrical sensors and artificial intelligence. This is part of an effort to build an “electronic tongue.”

The sensors are ion-sensitive field-effect transistors (ISFETs) whose sensing material is based on graphene, a single layer of carbon atoms arranged in a honeycomb pattern. These graphene-based ISFETs detect changes in the chemical composition of liquids by converting those changes into measurable electrical signals.

The researchers connected these sensors to a computer and used them to collect data on various liquids, like different types of milk, soft drinks, and fruit juices.

Next, they trained AI models on the sensor data, building a system that could:

identify different types of drinks (like distinguishing between Coke and Pepsi),

detect whether a liquid has been tampered with (like water added to milk),

check whether a drink is still fresh, and

identify potentially harmful chemicals in very small amounts.

Thus, the AI models acquired a limited, data-driven analog to taste perception.
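To make the general idea concrete, here is a minimal sketch of one simple way to turn sensor readings into drink labels: average each drink’s training readings into a “fingerprint” and assign a new sample to the nearest fingerprint (a nearest-centroid classifier). This is an illustrative assumption, not the researchers’ method, which this summary does not specify; the three-channel readings, the drink labels, and the function names are all hypothetical.

```python
import math

# Hypothetical, made-up training readings (arbitrary units). Each sample is a
# vector with one value per sensor channel; these are NOT data from the paper.
TRAINING = {
    "cola_a": [[0.82, 0.31, 0.55], [0.80, 0.33, 0.57], [0.84, 0.30, 0.54]],
    "cola_b": [[0.70, 0.42, 0.61], [0.72, 0.40, 0.60], [0.69, 0.43, 0.62]],
    "milk": [[0.25, 0.88, 0.12], [0.27, 0.86, 0.14]],
}


def centroid(samples):
    """Average each sensor channel across all samples of one drink."""
    n = len(samples)
    return [sum(channel) / n for channel in zip(*samples)]


def train(training):
    """Reduce each drink to a single average 'fingerprint' vector."""
    return {label: centroid(samples) for label, samples in training.items()}


def classify(model, reading):
    """Return the drink whose fingerprint is closest (Euclidean distance) to the reading."""
    return min(model, key=lambda label: math.dist(model[label], reading))


model = train(TRAINING)
print(classify(model, [0.81, 0.32, 0.56]))  # prints: cola_a
```

A real system would need many more samples, calibration for sensor drift, and likely a more flexible model; the sketch only shows that “tasting” here amounts to pattern-matching on electrical signals.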

Given that the latest versions of ChatGPT can already process images (sight) and audio (hearing), AI is steadily progressing toward analogs of all five basic human senses.

Upcoming CAIP Event

On Tuesday, October 22nd from 6–8 p.m. ET, the Center for AI Policy is hosting an AI policy happy hour at Sonoma Restaurant (223 Pennsylvania Ave SE) in Washington, DC.

Anyone working on AI policy or related topics (semiconductors, cybersecurity, data privacy, etc.) is welcome to attend.

If you’d like to stop by, please RSVP here.

News at CAIP

Jason Green-Lowe wrote a letter to the editor responding to the Financial Times editorial on SB 1047.

Jason Green-Lowe wrote a blog post about the latest public opinion polls on AI. Many voters prefer a careful, safety-focused approach to AI development over a strategy emphasizing speed and geopolitical competition.

Makeda Heman-Ackah and Jason Green-Lowe wrote a blog post about AI agents: “The Risks and Rewards of AI Agents Cut Across All Industries.”

Claudia Wilson wrote a blog post responding to the 2024 Nobel Prize winners in chemistry and physics: “Both Nobel Laureates and Everyday Americans Recognize the Need for AI Safety.”

Quote of the Week

The litmus test for how good a person you are is if you are nice to a waiter.

In the future, it’ll be how kind you are to your AI companion.

—Alana O’Grady Lauk, an executive at the tech startup Verkada, discussing AI interaction etiquette

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music recommendations, please drop me a note at jakub@aipolicy.us.

—Jakub

Question: In terms of the EU AI Act and what we now know about Muah AI, would this be a prohibited or a high-risk technology? What's your rationale?