Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy. Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

Anthropic Teaches Claude to Use Computers

Two years ago, the AI startup Adept unveiled an AI prototype that could autonomously complete simple tasks on the internet, like modifying an Excel spreadsheet or finding a cheap refrigerator on Craigslist, in response to textual prompts.

“Today’s user interfaces will soon seem as archaic as landline phones do to smartphone users,” Adept declared, predicting that people would soon be able to interact with computers in natural language.

Fast forward to today: hundreds of millions of people regularly converse with AI chatbots, and Adept’s ambitious, pre-ChatGPT proclamation seems less and less far-fetched.

Just this week, the leading AI company Anthropic released an upgraded Claude 3.5 Sonnet model that can use computers as humans do—analyzing screenshots, moving cursors, and typing text to operate standard software interfaces. For example, it can order a pizza and plan a trip to watch the San Francisco sunrise.

On OSWorld, a benchmark for computer interaction, Sonnet achieves approximately 15% success with screenshots alone, and 22% when “afforded more steps to complete the task.” While well below human performance of 70–75%, these results substantially exceed the previous AI record of 8%.

Anthropic conducted pre-deployment testing of the upgraded Sonnet model with both the U.S. and UK AI Safety Institutes, but it’s unclear whether these government bodies had access to the computer use version.

After testing, Anthropic concluded that “the updated Claude 3.5 Sonnet, including its new computer use skill, remains at AI Safety Level 2” in the company’s updated framework for mitigating catastrophic AI risks. This designation suggests that while Claude’s new computer skills mark a significant advance, they don’t cross key risk thresholds that would require enhanced safety measures.

Nonetheless, AI systems can already damage people’s devices when given unrestricted freedom. Buck Shlegeris, CEO of an AI safety nonprofit, let an earlier version of Claude run wild on his laptop for amusement. “Unfortunately,” he announced afterwards, “the computer no longer boots.”

For reasons like this, Anthropic recommends using Claude in isolated environments with limited privileges and data access, while maintaining human oversight for consequential decisions. But concerningly, Claude will sometimes take an undesirable action “even if it conflicts with the user’s instructions.”

While AI’s current computer capabilities may seem basic, they hint at a future where the line between human and AI capabilities continues to blur, elevating both opportunities and risks.

South Korea Plans AI Safety Institute

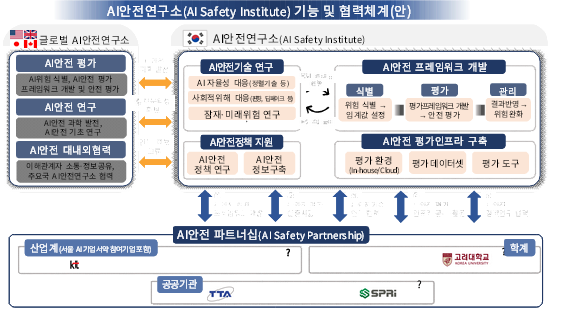

South Korea’s Ministry of Science and ICT announced plans to launch the country’s AI Safety Research Institute next month, in line with remarks by President Yoon Suk Yeol at the AI Seoul Summit in May.

The institute, housed within the Electronics and Telecommunications Research Institute (ETRI), will operate from the Pangyo Global R&D Center. Starting with 10 researchers and additional seconded personnel, it aims to hire more staff next year and grow to 30 employees.

South Korea’s institute will focus on four core areas:

Safety evaluations to assess AI’s risks in areas like biological misuse and evading human oversight, including the development of infrastructure for conducting evaluations.

Policy research to analyze governance efforts, study national security vulnerabilities, support the establishment of safety policies, and more.

Domestic and international cooperation to train personnel, share information, promote interoperability, and serve as a safety hub.

Technical safety research to address risks related to loss of control, societal bias, and unknown risks, including scenario analysis for artificial general intelligence (AGI).

By tackling these challenges, South Korea aims to position itself as a leader in ensuring that the rapid advancement of AI technology is matched by equally robust safety progress.

Microsoft Presses Forward on AI Agents

Microsoft is continuing to upgrade its offerings for customers seeking to build and deploy AI “agents” that can operate more autonomously than traditional chatbots, with less human prompting and handholding.

The tech giant is introducing ten new AI agents focused on business processes like lead qualification and customer service, as well as tools for building and customizing AI agents in Copilot Studio. Outside Microsoft, firms like McKinsey and Clifford Chance are already using these autonomous systems.

“Think of agents as the new apps for an AI-powered world,” writes Microsoft. In the future, “every organization will have a constellation of agents—ranging from simple prompt-and-response to fully autonomous.”

Meanwhile, Microsoft’s competitors are pursuing distinct strategies: Amazon is contemplating shopping agents capable of autonomous purchasing within user-defined budgets, while Google’s Project Astra aims to create a “universal AI agent” that operates in multiple modalities.

Today’s AI agents are used for fairly rudimentary tasks. As AI keeps improving, time will tell whether Microsoft’s vision of a “constellation of agents” comes to life.

News at CAIP

Claudia Wilson wrote a blog post titled “AI Companions: Too Close for Comfort?”

Mark Reddish wrote a blog post with a recommendation for the first meeting of global AI safety institutes: reclaim safety as the focus of international conversations on AI.

Jason Green-Lowe wrote a blog post on the FTC final rule banning fake AI reviews: “Fake AI Reviews Are the First Step on a Slippery Slope to an AI-Driven Economy.”

We joined a coalition calling on Congress to authorize the U.S. AI Safety Institute.

Ep. 12 of the CAIP Podcast features Dr. Michael K. Cohen, a postdoc AI safety researcher at UC Berkeley. Jakub and Michael discuss OpenAI’s superalignment research, policy proposals to address long-term planning agents, academic discourse on AI risks, California’s SB 1047 bill, and more. To tune in, read the transcript, or peruse relevant links, visit the CAIP Podcast Substack.

Quote of the Week

I’ve grown not to entirely trust people who are not at least slightly demoralized by some of the more recent AI achievements.

—Tyler Cowen, an author, podcaster, Bloomberg Opinion columnist, host of the Marginal Revolution blog, and professor of economics at George Mason University

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music recommendations to pass along, please drop me a note at jakub@aipolicy.us.

—Jakub