AI Policy Weekly #49

FrontierMath, Ai-Da’s $1 million painting, and cybersecurity bug bounty programs

Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy. Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

Extremely Difficult Math Test Stumps Current AI

Researchers at Epoch AI have introduced FrontierMath, a benchmark that will help measure the dwindling gap between AI and human mathematical reasoning.

Leading AI models excel at existing math tests like GSM8K and MATH, but those benchmarks consist of problems at roughly a high school or early undergraduate level. In contrast, top AI models solve less than 2% of FrontierMath problems, which can require hours of effort from expert mathematicians.

Over 60 mathematicians collaborated to create FrontierMath, which contains hundreds of new problems from diverse areas of math.

Every problem was reviewed by expert mathematicians to verify it was solved correctly, clearly worded, resistant to guessing, and appropriately rated for difficulty.

To keep the problems from leaking into future AI training data, they were often stored in password-protected files and shared through secure communication channels.

FrontierMath problems can require multiple pages of advanced math to solve. According to world-renowned mathematician Terence Tao, they are “extremely challenging.”

“I think that in the near term basically the only way to solve them, short of having a real domain expert in the area, is by a combination of a semi-expert like a graduate student in a related field, maybe paired with some combination of a modern AI and lots of other algebra packages.”

Current AI models are stumped, scoring below 2%. Tao expects that FrontierMath will “resist AIs for several years at least.”

Meanwhile, Anthropic co-founder Jack Clark predicts that “an AI system working on its own will get 80% on FrontierMath by 2028.”

With AI systems approaching perfect scores on simpler tests, FrontierMath establishes a new standard for evaluating AI’s advanced mathematical prowess.

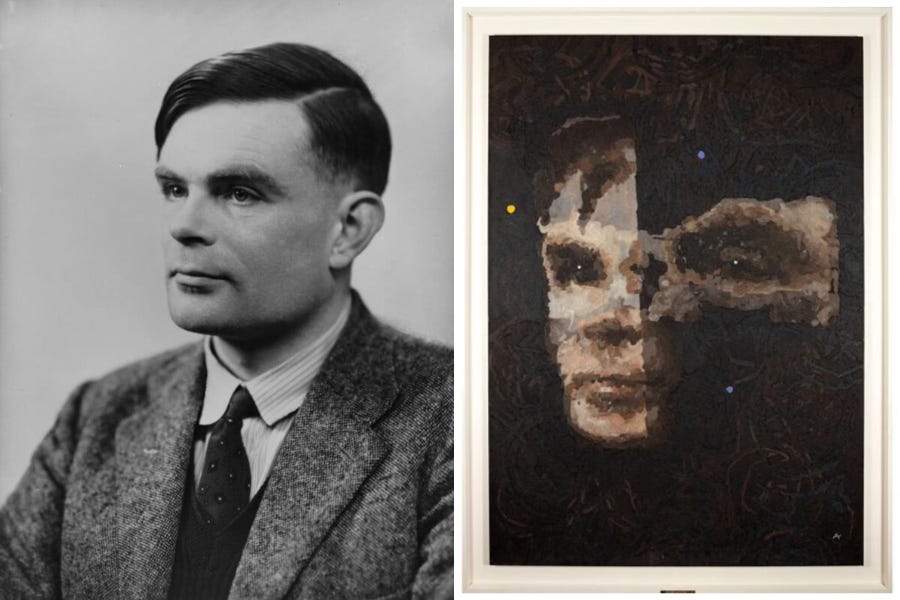

Robot’s Artwork Fetches $1.1 Million at Auction

A humanoid robot artist named Ai-Da helped create a portrait that recently sold for $1.1 million in an auction at Sotheby’s.

The piece, titled AI God, pays tribute to the pioneering British mathematician and computer scientist Alan Turing, whose work laid the foundation for modern computing and artificial intelligence.

The portrait dramatically exceeded its pre-sale estimate of $120,000–$180,000, drawing 27 bids before selling to an anonymous U.S. collector.

Its creation involved a sophisticated process: after choosing to paint Turing and sketching initial ideas, Ai-Da used her camera eyes and robotic arm to create 15 separate paintings of Turing’s face, each taking 6–8 hours and using up to 10 colors in both oil and acrylic paint.

Finally, humans used computer graphics to combine several of these works into the final composition “based on a discussion with Ai-Da (using her language model) about what she wants the final artwork to look like.”

In other news, the Beatles’ AI-assisted song “Now and Then” recently received two Grammy nominations. Advancements like these underscore the ongoing fusion of human and machine creativity.

Google DeepMind Employees Advocate for AI Bug Bounty Programs

Google DeepMind’s AI policy employees maintain a blog called “AI Policy Perspectives” for discussing AI governance topics in their personal capacity.

Recently, several employees wrote an essay titled “Securing AI,” which explores cybersecurity “bug bounty” programs and how similar initiatives could address AI security vulnerabilities.

Bug bounty programs offer prizes to security researchers who discover and responsibly report technical vulnerabilities in software systems. The DeepMind employees write that these programs “have seen enormous success over the years,” with companies like Google and Meta paying out millions of dollars to successful, helpful bounty hunters.

The essay discusses how AI is unlike traditional software. For example, today’s chatbots can take just about any sentence in any language as an input, provided that it fits within the model’s input length limit.

Nonetheless, bounty-style programs could clearly target AI issues like prompt injection, adversarial attacks, and model theft. Indeed, the Department of Defense already launched an AI bounty program earlier this year, likely responding to a directive in the FY2024 National Defense Authorization Act (NDAA).

To strengthen AI security, the DeepMind employees recommend considering a mix of public and private sector measures. They advocate for AI systems built “secure by design,” government-industry collaboration on vulnerability classification frameworks and defensive techniques, potential insurance-based incentives for bug bounty participation, and collaborative programs to connect AI research institutions with cybersecurity experts.

Congress is already taking action on AI security in bills like the bipartisan AI Incident Reporting and Security Enhancement Act, which has passed out of the House Science Committee and resembles the Senate’s bipartisan Secure AI Act. As AI security is an urgent issue, the Center for AI Policy calls on Congress to merge these two bills into a single law for the President to sign.

News at CAIP

Tristan Williams published CAIP’s latest research report on AI and music, titled “Composing the Future: AI’s Role in Transforming Music.”

This report accompanied our panel discussion today in the Rayburn House Office Building on AI and the future of music. Stay tuned (no pun intended) for a video recording.

Claudia Wilson published an article on U.S.-China competition in Tech Policy Press: “The US Can Win Without Compromising AI Safety.”

Claudia Wilson led CAIP’s response to the Department of Energy on the Frontiers in AI for Science, Security, and Technology (FASST) Initiative.

WIRED quoted CAIP in an article titled “What Donald Trump’s Win Will Mean for Big Tech.”

InvestorPlace referenced CAIP in an article titled “Data Centers and the AI Revolution… and How Trump Could Ignite a Second AI Boom.”

Jason Green-Lowe spoke on an AI policy panel for the University of Chicago’s Alumni in AI group.

ICYMI: Jason Green-Lowe spoke about federal AI legislation at Harvard’s Berkman Klein Center for Internet and Society. Slides are available here.

Quote of the Week

As the systems get more capable, this stuff gets more stressful.

—Amanda Askell, a researcher at Anthropic working on tuning and aligning AI models, describing the sense of responsibility that comes with her work

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music recommendations to pass along, please drop me a note at jakub@aipolicy.us.

—Jakub