Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy. Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

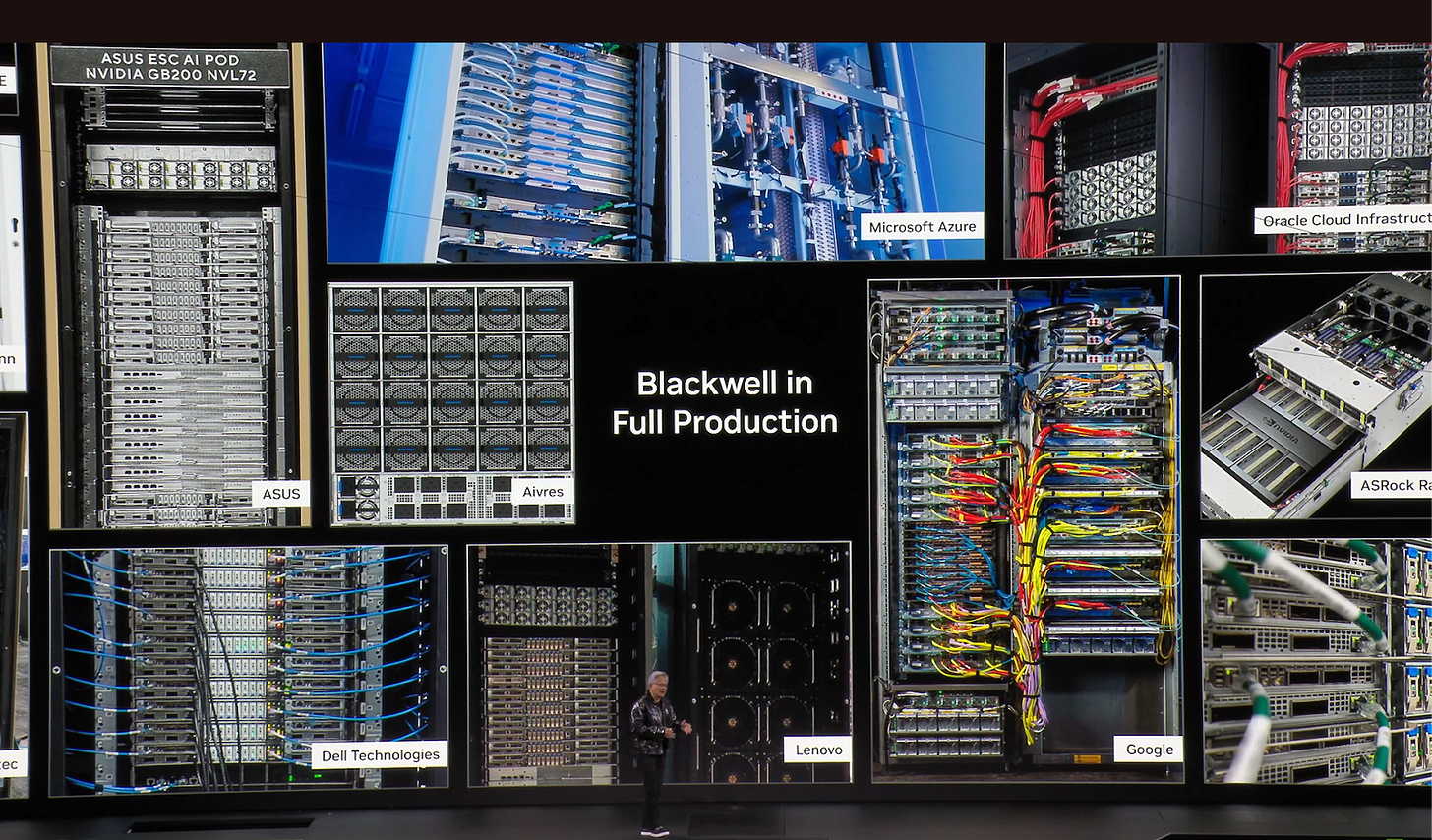

NVIDIA Shares New Products and Advancements at CES 2025

“The IT department of every company is going to be the HR department of AI agents in the future. [...] They will maintain, nurture, onboard, and improve a whole bunch of digital agents and provision them to the companies to use.”

That’s a prediction NVIDIA CEO Jensen Huang made during his 90-minute keynote speech at the annual Consumer Electronics Show (CES).

Huang also announced several new NVIDIA products and tools.

Perhaps most notably, NVIDIA’s new RTX 50 series of consumer graphics cards represents the company’s next generation of chips for gaming and AI processing. The flagship RTX 5090 card can perform quadrillions of AI inference calculations every second; it’s available for $1,999 starting January 30th.

The RTX 50 cards also introduce Deep Learning Super Sampling 4 (DLSS 4), an AI-powered software suite that reduces the work needed to display smooth graphics. For every frame a computer fully renders, DLSS 4 can use AI to generate up to three additional frames, meaning games can run at high frame rates with less rendering work.
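To make the “up to three additional frames” arithmetic concrete, here is a back-of-the-envelope sketch in Python. It assumes the best case described above (three AI-generated frames for every fully rendered frame) and ignores any processing overhead or latency; the function names are hypothetical illustrations, not part of any NVIDIA software.

```python
def max_displayed_fps(rendered_fps: float, generated_per_rendered: int = 3) -> float:
    """Idealized upper bound on displayed frame rate with AI frame generation.

    Assumes each fully rendered frame is followed by `generated_per_rendered`
    AI-generated frames, and ignores overhead (a simplifying assumption).
    """
    if generated_per_rendered < 0:
        raise ValueError("generated_per_rendered must be non-negative")
    return rendered_fps * (1 + generated_per_rendered)


def rendered_share(generated_per_rendered: int = 3) -> float:
    """Fraction of displayed frames that the GPU fully renders."""
    return 1 / (1 + generated_per_rendered)


if __name__ == "__main__":
    print(max_displayed_fps(30))  # 120
    print(rendered_share())       # 0.25
```

Under these assumptions, a game that fully renders 30 frames per second could display up to 120, with only one in four displayed frames fully rendered.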

For AI enthusiasts, NVIDIA unveiled Project DIGITS, a $3,000 desktop computing unit that can run AI systems with up to 200 billion parameters. Two DIGITS machines can link together to run even larger models.

Huang also highlighted upgrades to NVIDIA’s AI development toolkit:

Various tools to help engineers build and deploy AI models, such as inference microservices, agentic AI blueprints, and Launchables.

The Isaac GR00T blueprint for synthetic motion generation, which records human movements using virtual reality headsets like the Apple Vision Pro, then uses AI to expand that data into larger training datasets for robots.

Cosmos, a set of “world foundation models”—essentially AI systems that generate and simulate physics-based scenarios—for training robots and self-driving cars.

In the automotive world, NVIDIA’s platform for autonomous vehicles, DRIVE Hyperion, passed authoritative industry safety assessments. NVIDIA also announced new partnerships with Toyota, Aurora, and Continental for building driver assistance capabilities.

Overall, NVIDIA continues to enhance its AI infrastructure offerings, laying the foundation for further AI advancements.

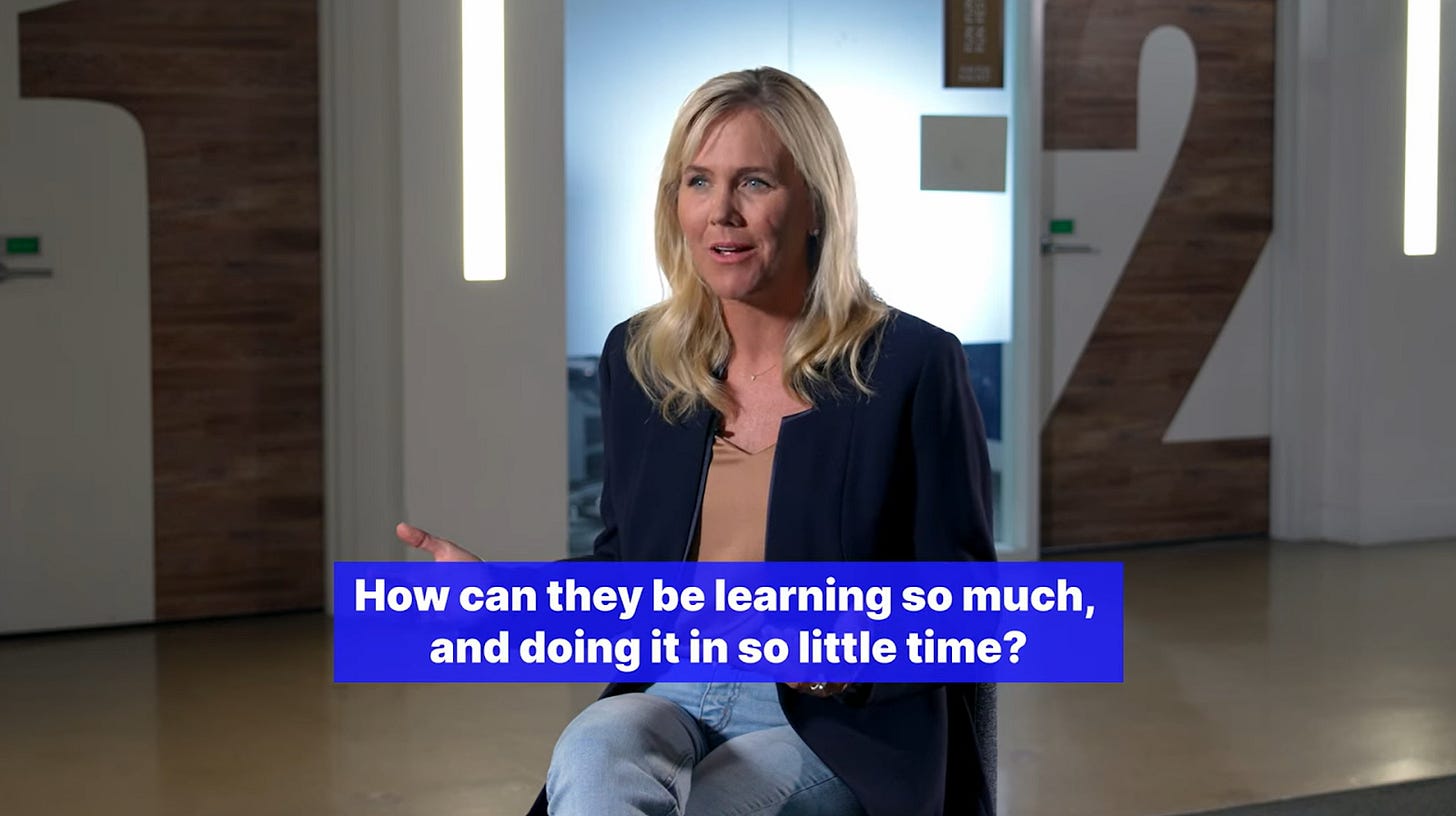

Arizona Greenlights New AI-Powered Online Charter School

Last month, the Arizona State Board for Charter Schools approved an application to launch Unbound Academy, an online-only charter school that plans to open this fall for hundreds of students in grades 4–8.

Unbound Academy uses a proprietary “2 Hour Learning” approach where students complete core academics in just 2 hours per day using AI-powered educational software, including tools from IXL and Khan Academy. The remaining 3–4 daily school hours focus on interactive workshops developing life skills like financial literacy, public speaking, and entrepreneurship.

Instead of traditional teachers, Unbound Academy employs “guides” who serve more as coaches and mentors. These guides help students navigate the AI-powered learning platform, provide targeted support when students struggle, and lead afternoon life skills workshops. However, they still need a teaching certificate or equivalent.

Unbound Academy’s founders have used the 2 Hour Learning model for years at an in-person private school in Austin, Texas, called “Alpha School,” which opened in 2016 and currently costs $40,000 per year.

Additional Alpha Schools opened in Brownsville, Texas, in 2022 (where half the students have parents working at SpaceX) and in Miami, Florida, in 2024. This fall, six more Alpha Schools are set to open in Houston, Phoenix, Santa Barbara, West Palm Beach, Tampa, and Orlando.

Critically, these are all physical, brick-and-mortar schools. They also charge steep tuition, whereas Arizona charter schools are taxpayer-funded and tuition-free. It remains to be seen whether the 2 Hour Learning approach can work in a fully virtual format and for students from all economic backgrounds.

EU Releases Second Draft of AI Code of Practice

The European Union (EU) recently published its second draft of the General-Purpose AI Code of Practice, offering detailed guidance for providers of advanced AI systems to comply with the EU AI Act.

The Code serves as a practical roadmap for AI providers, detailing a way to demonstrate compliance with the AI Act’s requirements for general-purpose AI models. It is particularly focused on models that will be released after August 2, 2025, when the AI Act’s rules on general-purpose AI begin to apply.

For general-purpose AI providers, the Code requires:

Technical documentation for authorities (upon request) on topics like energy consumption, training data, hardware usage, parameter counts, and test results.

Information for downstream providers to understand and safely integrate the model, such as “how the model interacts with hardware and software that is external to the model.”

Copyright compliance efforts, like excluding piracy websites from crawling activities, and publicly disclosing all crawlers used for gathering training data.

For providers of models with potential systemic risks, additional obligations include:

Writing and implementing a safety and security framework, including systemic risk tiers, commensurate mitigations, and “best-effort estimates of timelines for when they expect to develop a model that reaches each risk tier.”

Relevant systemic risks include cyberattacks, weapons of mass destruction, large-scale harmful manipulation, large-scale illegal discrimination, and loss of human oversight.

Evaluating models for risks, with techniques that “would be accepted by the majority of AI safety researchers to be amongst the best indicators of a model’s capabilities, propensities, or effects.”

Creating a new model report for each model entering the EU market, including “the results of risk assessment and mitigation […] as well as justifications of development and deployment decisions.”

Implementing security measures suitable for thwarting “highly motivated and well-resourced non-state actors,” in line with RAND’s SL3 benchmark.

Reporting serious incidents such as irreversible critical infrastructure disruptions.

Whistleblower protections for reports on violations of the AI Act, in line with the EU Whistleblower Directive.

A third draft of the Code is expected by February 17, 2025, allowing for further refinement based on ongoing stakeholder feedback.

News at CAIP

We’re excited to welcome the newest member of the CAIP team, Joe Kwon, who joins us as our Technical Policy Analyst. Joe previously worked as a Research Engineer at LG AI Research and as a Research Associate at MIT’s Computational Cognitive Science Lab.

Kate Forscey wrote a blog post on “The Cost of Congressional Inaction on AI Legislation.”

ICYMI: Jason Green-Lowe wrote an op-ed in The Hill about federal AI legislation: “How Congress dropped the ball on AI safety.”

ICYMI: Ep. 14 of the CAIP Podcast features Anton Korinek, an economics professor at the University of Virginia, discussing AI’s economic and workforce impacts.

Quote of the Week

I thought I had the coolest job ever. I didn’t process the fact that this job is necessary for our entire world to exist as it does.

—Brienna Hall, an ASML engineer who maintains extreme ultraviolet (EUV) lithography machines in Boise, Idaho

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or wish to share music recommendations, please drop me a note at jakub@aipolicy.us.

—Jakub