AI Policy Weekly #62

$100B investments, frontier AI frameworks, Workday layoffs

Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy (CAIP). Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

France, EU, Big Tech Spend Hundreds of Billions on AI

With Super Bowl LIX ads costing over $7 million for 30 seconds, OpenAI likely spent at least $14 million on its 60-second commercial in the first half. And that’s just for the time slot—there are many other costs in running a Super Bowl ad.

However, even if all the big game’s AI ads are tallied together, their costs pale in comparison to 2025’s biggest investments in AI.

Let’s start with France. At the recent Paris AI Summit, President Emmanuel Macron announced that over 109 billion euros (114 billion dollars) will go into France’s AI sector over the next few years.

Part of this sum is a €20 billion commitment from Brookfield Asset Management to build AI infrastructure in France.

Even more funding comes from the UAE: Bloomberg reports that “Emirati ruler Mohamed bin Zayed made a commitment to Macron over dinner last week to spend €50 billion.”

Not to be outdone, the European Union made its own announcement at the Paris Summit. A new “InvestAI” initiative will “mobilize €200 billion for investment in AI, including a new European fund of €20 billion for AI gigafactories.”

There will be at least four AI gigafactories, and each one will have 100,000 high-performance AI chips. This adds to Europe’s €10 billion initiative to build a dozen smaller supercomputing centers.

As with France, most of the funding is private. Over 60 European companies joined forces Monday to launch an “EU AI Champions Initiative,” which announced that “more than 20 international key capital allocators have earmarked €150 billion for AI-related opportunities in Europe over the next five years.”

“We want Europe to be one of the leading AI continents,” said European Commission President Ursula von der Leyen. “And this means embracing a way of life where AI is everywhere.”

In the East, the state-owned Bank of China announced an “Action Plan” to support China’s AI industry with no less than 1 trillion yuan (137 billion dollars) over the next five years. The announcement has received surprisingly little coverage in Western media.

All of these investments come on the heels of a major announcement in the United States, where the Trump-backed Stargate project intends to invest $500 billion by 2029 in building U.S. AI infrastructure for OpenAI.

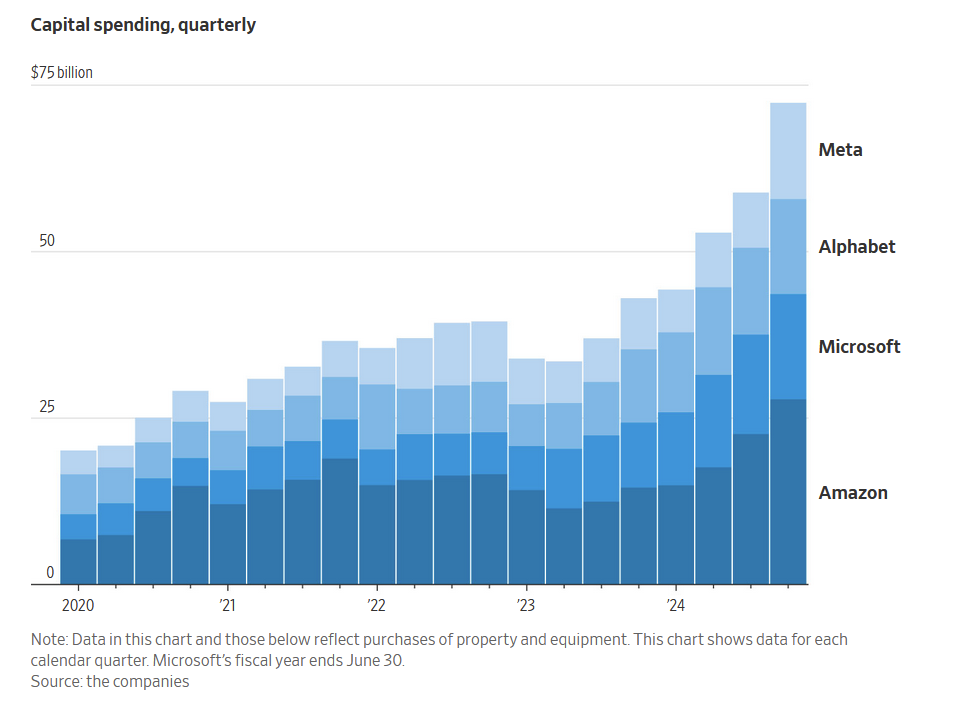

Beyond these national and regional initiatives, individual tech companies are making massive investments of their own:

Meta CEO Mark Zuckerberg said “we’re planning to invest $60-65B in capex this year while also growing our AI teams significantly.”

Google expects its 2025 capital expenditures to reach $75 billion.

Microsoft shared plans to “invest approximately $80 billion to build out AI-enabled datacenters to train AI models and deploy AI and cloud-based applications around the world” in 2025.

Amazon anticipates spending $100 billion on capital expenditures in 2025. According to CEO Andy Jassy, “the vast majority of that capex spend is on AI for AWS.”

In sum, these four companies alone plan to spend roughly $315 to $320 billion in 2025, much of it on AI.

AI is here to stay, with all its opportunities and risks.

Companies Publish Risk Management Frameworks for Frontier AI

At the AI Seoul Summit last May, many leading AI companies made voluntary commitments to publish risk management frameworks addressing possible “severe risks” in future AI systems.

They promised to complete their frameworks by the Paris AI Summit, which ended on Tuesday.

In the week leading up to the summit, frameworks indeed came in from Meta, G42, Cohere, Microsoft, Amazon, and xAI. This adds to earlier frameworks from Anthropic, OpenAI, Google, Magic, and Naver.

Here’s what stands out about each new framework:

Meta identifies outcomes it wants to prevent in the cyber and bio domains, then works backward to determine whether AI models could “uniquely enable” specific threat scenarios.

G42 describes specific controls for three stages of “Deployment Mitigation Levels” and “Security Mitigation Levels.” A final, fourth stage is “to be defined” after models reach the third stage.

Cohere’s framework is more business-focused, framing everything through the lens of enterprise deployment contexts and customer needs.

Microsoft runs evaluations “during pre-training, after pre-training is complete, and prior to deployment,” to determine whether deeper inspection is necessary. These apply to any model pre-trained with over 10^26 computing operations.

Amazon includes a 3-page appendix describing Amazon’s existing security practices in detail.

xAI names some specific tests it will use, like the Weapons of Mass Destruction Proxy benchmark (WMDP). It also has a section on “loss of control” risks.

These frameworks are a good step. But they vary widely, reflecting uncertainties about how to manage powerful AI models that could arrive quite soon.

“Time is short,” Anthropic CEO Dario Amodei warned in a statement on the Paris Summit.

“Possibly by 2026 or 2027 (and almost certainly no later than 2030), the capabilities of AI systems will be best thought of as akin to an entirely new state populated by highly intelligent people appearing on the global stage—a ‘country of geniuses in a datacenter.’”

So, in the next few years, companies will need to update their frameworks as their understanding of risks evolves rapidly. Currently, there is no requirement for them to do that.

Nor is there any requirement for these frameworks to effectively prevent severe risks.

And even if they do, no authority currently verifies that companies actually follow their own risk management policies.

Further, major AI companies like Mistral and NVIDIA have failed to publish any framework, despite committing to do so by the Paris Summit.

Other companies like “Safe Superintelligence Inc.” (SSI), which is currently in fundraising talks at a reported $20 billion valuation, have made no commitment to publish a framework.

For all these reasons, the Center for AI Policy’s 2025 Action Plan calls for legislation to require all billion-dollar AI companies to meet a minimum standard with their risk management frameworks.

Workday Fires 1,750 People to Meet the AI Moment

“We’re at a pivotal moment,” wrote Workday CEO Carl Eschenbach in a recent email to his employees. Workday’s primary product is a software platform to handle HR, finance, and payroll needs.

“Companies everywhere are reimagining how work gets done, and the increasing demand for AI has the potential to drive a new era of growth for Workday. This creates a massive opportunity for us, but we need to make some changes.”

Eschenbach’s email continued, emphasizing new priorities like “bringing innovations to market faster” and “investing strategically.”

The bottom line: “To help us achieve this, we have made the difficult, but necessary, decision to eliminate approximately 1,750 positions, or 8.5% of our current workforce.”

Departing U.S. employees will receive a minimum of 12 weeks of severance pay, softening some of the blow.

Workday’s strategic shift resembles a move that the file hosting company Dropbox made in April 2023. CEO Drew Houston fired 500 employees—nearly a sixth of the company—in part because “the AI era of computing has finally arrived.”

More recently, Meta has begun the process of laying off thousands of employees. A leaked memo from CEO Mark Zuckerberg indicates that part of the reason is the company’s expanding focus on AI.

These kinds of transitions show that AI is already reshaping the U.S. workforce.

CAIP News

Claudia Wilson, in collaboration with Emmie Hine of Yale’s Digital Ethics Center, published a research report on open source AI and relevant policy proposals.

Ep. 15 of the CAIP Podcast features Bill Drexel, Fellow at the Center for a New American Security (CNAS). Jakub and Bill discuss AI, China, and national security.

Mark Reddish wrote a blog post on the new AI Agent Index and its implications for AI policy.

ICYMI: CAIP recently convened public safety leaders, federal officials, and AI experts to examine AI threats to emergency response.

ICYMI: Jason Green-Lowe wrote a blog post on Super Bowl LIX, AI-powered commercials, and the gradual loss of human control over AI.

Quote of the Week

The vast expanse of the world’s knowledge is now accessible in ways that would have filled past generations with awe. However, to ensure that advancements in knowledge do not become humanly or spiritually barren, one must go beyond the mere accumulation of data and strive to achieve true wisdom.

—the Vatican’s Note on the Relationship Between Artificial Intelligence and Human Intelligence, approved by Pope Francis

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or wish to share music recommendations, please drop me a note at jakub@aipolicy.us.

—Jakub