Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy (CAIP). Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

Anthropic Deploys Claude 3.7 Sonnet with “Extended Thinking”

On Monday, the AI company Anthropic released a new AI model called Claude 3.7 Sonnet.

The name might sound strange, but there’s a reason for it. In March 2024, Anthropic released Claude 3 Sonnet, which it upgraded to Claude 3.5 Sonnet just three months later. Anthropic then made another significant upgrade in October, but kept the name as “Claude 3.5 Sonnet.” Due to the October upgrade’s improved capabilities, some users began calling it “Claude 3.6 Sonnet.” So now, the unofficially named Claude 3.6 Sonnet has a successor, officially named “Claude 3.7 Sonnet.” Yes, this is confusing.

Sonnet 3.7 is a “reasoning” model that can think before responding, similar to OpenAI’s o3 and DeepSeek’s R1.

But unlike prior reasoning models, Sonnet 3.7 can also give immediate responses without extended thinking. This makes it both a standard chatbot and a reasoning chatbot. Claude users can start new chats with either of these two modes.

Like most AI companies releasing a new model, Anthropic used a barrage of standardized tests to assess Claude 3.7 Sonnet’s capabilities.

For instance, on the Google-Proof Q&A (GPQA) benchmark, Sonnet 3.7 scored 68% in normal mode, 78.2% in reasoning mode, and 84.8% in a bespoke version of reasoning mode with “parallel test-time compute.” For reference, xAI’s new Grok 3 Beta scored 84.6% in its own amplified reasoning attempt.

Anthropic also worked to improve Claude’s ability to autonomously carry out tasks such as using a computer.

“Compared to its predecessor, Claude 3.7 Sonnet can allocate more turns—and more time and computational power—to computer use tasks, and its results are often better,” explains Anthropic.

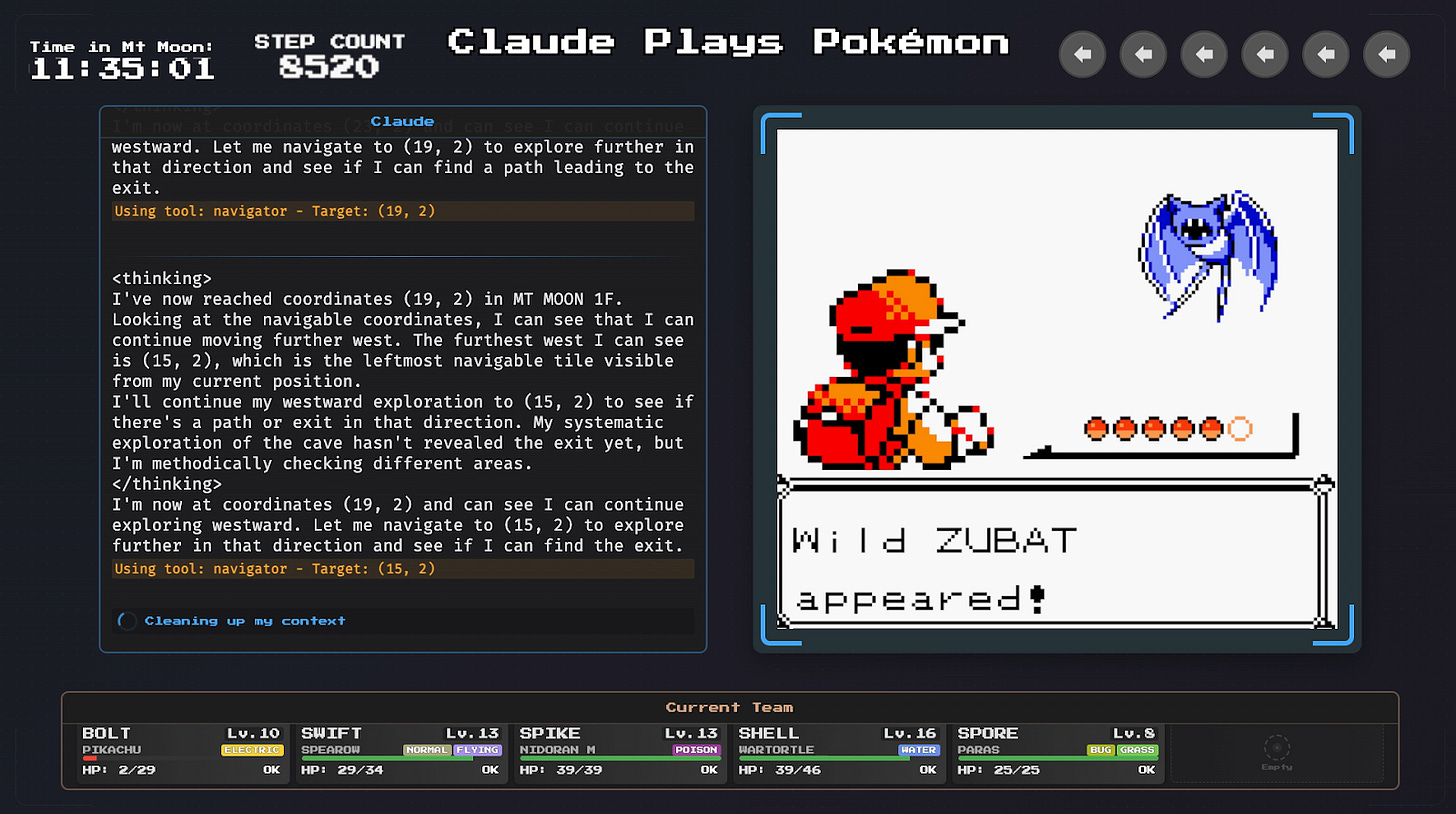

These agentic capabilities also helped Claude in its quest to be the best AI model (the very best, like no one ever was) at playing the famous 1996 Pokémon Red video game.

Specifically, Anthropic “equipped Claude with basic memory, screen pixel input, and function calls to press buttons and navigate around the screen, allowing it to play Pokémon continuously beyond its usual context limits, sustaining gameplay through tens of thousands of interactions.”

Previous versions of Claude “became stuck very early in the game, with Claude 3.0 Sonnet failing to even leave the house in Pallet Town where the story begins.”

“But Claude 3.7 Sonnet’s improved agentic capabilities helped it advance much further, successfully battling three Pokémon Gym Leaders (the game’s bosses) and winning their Badges.”

This is far from victory, however. In total, the game has eight Gym Leaders.

On a less cheery note, Anthropic also released detailed information on the risk assessments it applied to Claude, including testing for chemical, biological, radiological, and nuclear (CBRN) threats.

Based on the CBRN test results, Anthropic believes “there is a substantial probability that our next model may require ASL-3 safeguards.”

These heightened safety and security measures apply once Claude is clever enough to “significantly help individuals or groups with basic technical backgrounds (e.g., undergraduate STEM degrees) to create/obtain and deploy CBRN weapons.”

In October 2024, Anthropic said its teams are “currently developing and building ASL-3 Deployment Safeguards.” Hopefully, they will finish their work fast enough to prevent CBRN misuse.

As AI models grow more powerful, so too must the guardrails that keep them in check.

Minnesota Police Deploy AI to Spot Distracted Driving

In the western suburbs of Minneapolis, police officers recently installed AI-powered security cameras along State Highway 7 to spot distracted drivers who use a phone while driving.

Under Minnesota state law, drivers can “use their cell phone to make calls, text, listen to music or podcasts and get directions, but only by voice commands or single-touch activation without holding the phone.”

In just a few weeks, the AI cameras have helped police stop more than 100 distracted drivers and issue approximately 70 citations.

“We’ve kind of discovered it’s a bigger problem than I think we realized,” said South Lake Minnetonka Patrol Sergeant Adam Moore.

The Highway 7 Safety Commission received a $450,000 state grant to implement these AI cameras, which began operation on February 1, 2025.

Besides catching distracted driving, the AI systems also check whether a driver is wearing a seatbelt.

If the cameras suspect a violation, they send photographic evidence to an officer down the road, who can take a closer look and decide whether to pull over the driver.

“Going up to the driver telling them I have a picture of you with the phone in your right hand, it’s really hard for them to deny it,” Sgt. Moore said.

To protect privacy, the photos are automatically deleted within 15 minutes, unless a police officer needs to use them.

These “Heads-Up” cameras come from an Australian company named Acusensus, which has “already undertaken projects across 20 U.S. states” and opened a U.S. headquarters in Las Vegas. One notable application is in North Carolina, where Acusensus AI systems are monitoring distracted truck drivers.

Acusensus is especially popular in the United Kingdom, where nearly half of all police forces have used the technology in information-gathering trials or operational programs. In Devon and Cornwall, for example, police are testing a new Acusensus camera that looks for drivers under the influence of alcohol or drugs.

Around the world, drivers are facing a new reality: eyes on the road, or your distraction becomes data in a police officer’s hand.

Viral Demo Shows AI Agents Speaking in Machine-Optimized Language

A short video demonstrating how AI assistants can use inhuman acoustic communication went viral last week, gathering over 16 million views on X.

Created by Boris Starkov and Anton Pidkuiko at the ElevenLabs London Hackathon, the “GibberLink” project showcases two AI voice assistants that first recognize each other as machines, then switch from English speech to computer bleeps in order to share information more efficiently.

In the demonstration, one AI agent attempts to book a hotel room while another handles the reservation. Once they identify each other as artificial, they automatically switch to the more efficient sound protocol.

The demo uses ElevenLabs’ AI voice technology paired with ggwave, an open-source software library for transmitting data through sound waves.

Importantly, the AI agents didn’t develop this communication method themselves. Rather, they are “explicitly prompted to switch to the protocol if they believe that the other side is also an AI agent.”

“We wanted to show that in the world where AI agents can make and take phone calls (i.e. today), they would occasionally talk to each other,” explained Starkov. “Generating human-like speech for that would be a waste of compute, money, time, and environment.”

Research suggests that many human languages convey just under 40 bits of information per second when spoken at a normal pace. By contrast, 1990s dial-up internet modems could communicate over a thousand times faster.

Thus, there may indeed be a strong financial incentive to shift towards faster forms of machine-to-machine communication.
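The speed gap described above can be checked with a quick back-of-the-envelope calculation. The sketch below is illustrative only: the 39 bits-per-second figure stands in for “just under 40,” the 56,000 bits-per-second modem rate is an assumption (the text does not name a modem speed), and the 200-byte reservation payload is hypothetical.

```python
# Back-of-the-envelope comparison of spoken language vs. a dial-up modem.
# All three constants are assumptions chosen for illustration.
SPEECH_BITS_PER_SECOND = 39       # stands in for "just under 40 bits per second"
MODEM_BITS_PER_SECOND = 56_000    # assumed late-1990s dial-up rate
PAYLOAD_BITS = 200 * 8            # hypothetical 200-byte hotel reservation


def speedup(fast_bps: float, slow_bps: float) -> float:
    """Return how many times faster the first channel is than the second."""
    return fast_bps / slow_bps


def seconds_to_send(payload_bits: float, bits_per_second: float) -> float:
    """Return the time needed to push a payload through a channel."""
    return payload_bits / bits_per_second


ratio = speedup(MODEM_BITS_PER_SECOND, SPEECH_BITS_PER_SECOND)
speech_time = seconds_to_send(PAYLOAD_BITS, SPEECH_BITS_PER_SECOND)
modem_time = seconds_to_send(PAYLOAD_BITS, MODEM_BITS_PER_SECOND)

print(f"Modem vs. speech: about {ratio:,.0f}x faster")
print(f"Spoken aloud: {speech_time:.1f} s; over the modem: {modem_time:.3f} s")
```

Under these assumptions the modem comes out roughly 1,400 times faster than speech, consistent with the “over a thousand times” claim. The same payload that would take about 41 seconds to say aloud would take a small fraction of a second over the modem.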

What once seemed like Star Wars fantasy, with droids like R2-D2 conversing in efficient bleeps, may soon become an everyday business necessity.

CAIP News

On Monday, February 24th, CAIP hosted its Advanced AI Expo on Capitol Hill, featuring live demonstrations of cutting-edge AI technology from nonprofits and university teams across the country. Gray TV’s Molly Martinez covered the event for local news.

Jason Green-Lowe issued a statement on the Advanced AI Expo.

Jason Green-Lowe’s perspective on potential layoffs at the U.S. AI Safety Institute was quoted by Miranda Nazzaro in The Hill, David Meyer in Fortune, Anthony Ha in TechCrunch, Munazza Shaheen in TECHi, and Will Knight, Paresh Dave, and Leah Feiger in WIRED.

Claudia Wilson of CAIP and Emmie Hine of Yale’s Digital Ethics Center published an opinion piece in Just Security on the governance of open-source AI models, building on their recent research report.

Claudia Wilson is in Taiwan for RightsCon, an annual conference focused on the intersection of human rights and technology, where she will speak on a panel.

Quote of the Week

The number of people who can gain an audience for their writing and outperform AI has fallen considerably—and will continue to do so.

—David Perell, a writer, educator, podcaster, and entrepreneur known for his work in teaching people how to write

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music recommendations to pass along, please drop me a note at jakub@aipolicy.us.

—Jakub