AI Policy Weekly #68

Emotions, Ghiblification, Gemini 2.5

Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy (CAIP). Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

Heaviest ChatGPT Users Show Signs of Emotional Dependence

Last week, researchers from OpenAI and MIT Media Lab shared results from two complementary studies examining how ChatGPT usage affects emotional well-being and user behavior.

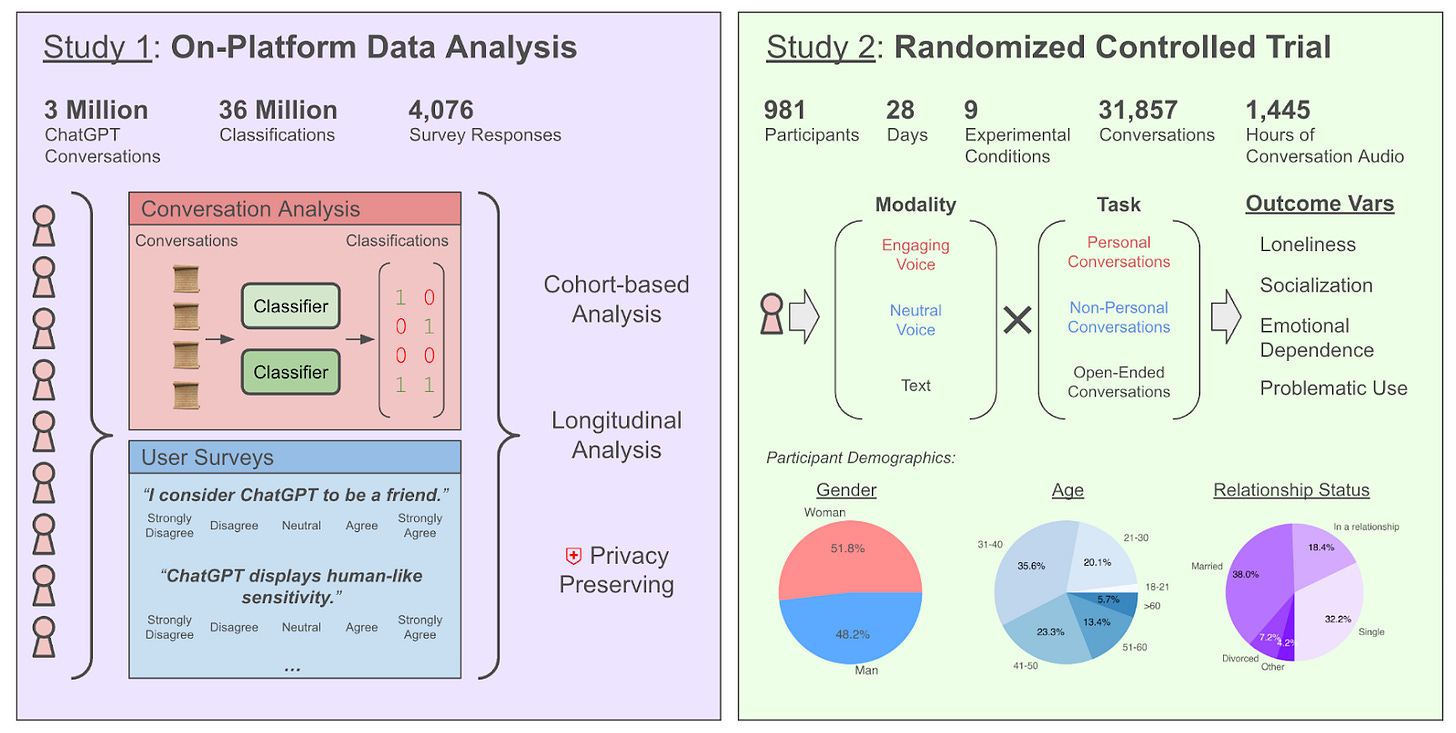

The first study analyzed real-world platform data: 3 million ChatGPT conversations, surveys from 4,076 users, and longitudinal tracking of approximately 6,000 heavy users.

In the platform analysis, researchers found that “a small number of users are responsible for a disproportionate share of affective use of models.” The top 10% of users “regularly” triggered classifiers detecting emotional engagement, reaching “past 50% of conversations or higher for a small number of users.”

“Power users were slightly more likely than control users to consider ChatGPT a ‘friend’ and to find it more comfortable than face-to-face interactions, though these views remain a minority in both groups.”

The platform data also found voice-based interactions exhibiting specific emotional markers up to 10 times more frequently than text-based chats.

The second study was a 28-day randomized controlled trial (RCT) with 981 participants assigned to nine different experimental conditions: three interaction modes (engaging voice, neutral voice, text) crossed with three daily conversation prompt types (personal, non-personal, open-ended).
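For readers who want to see where the nine conditions come from, here is a minimal sketch that pairs every interaction mode with every prompt type. The list and function names are illustrative assumptions, not taken from the researchers' materials.

```python
from itertools import product

# Labels copied from the study description above; the variable names are hypothetical.
INTERACTION_MODES = ["engaging voice", "neutral voice", "text"]
PROMPT_TYPES = ["personal", "non-personal", "open-ended"]


def experimental_conditions():
    """Return every (mode, prompt type) pairing: a 3 x 3 factorial design."""
    return list(product(INTERACTION_MODES, PROMPT_TYPES))


if __name__ == "__main__":
    for number, (mode, prompt) in enumerate(experimental_conditions(), start=1):
        print(f"Condition {number}: {mode} + {prompt} prompts")
```

Each participant lands in exactly one of these pairings, which is what lets the researchers compare modes and prompt types separately.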

Similar to the platform data, the RCT found that “higher daily usage—across all modalities and conversation types—correlated with higher loneliness, dependence, and problematic use, and lower socialization.”

“Exploratory analyses revealed that those with stronger emotional attachment tendencies and higher trust in the AI chatbot tended to experience greater loneliness and emotional dependence, respectively,” says the RCT paper.

However, unlike the platform study, the RCT found no significant difference in emotional engagement between voice and text modes when users were randomly assigned. “This suggests that users who are seeking affective engagement self-select into using voice [instead of text],” the researchers explained.

ChatGPT reaches 400 million weekly active users, so findings about even a small subset of users could affect millions of people.
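To make that scale concrete, the short sketch below multiplies the 400 million figure by a few example shares. The shares are hypothetical round numbers chosen for illustration; neither study reports them as estimates of how many users are affected.

```python
WEEKLY_ACTIVE_USERS = 400_000_000  # figure cited above


def users_in_share(share: float) -> int:
    """Number of weekly users represented by a given fraction of the user base."""
    return round(WEEKLY_ACTIVE_USERS * share)


if __name__ == "__main__":
    # Hypothetical shares for illustration only, not findings from the studies.
    for share in (0.001, 0.01, 0.05):
        print(f"{share:.1%} of weekly users is about {users_in_share(share):,} people")
```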

These studies have limitations. For example, the RCT made all participants use ChatGPT in some way—there was no “ChatGPT-free” control group. And for the platform analysis, certain kinds of people were probably more inclined to fill out the surveys.

Additionally, nearly 70% of randomized trial participants had never used voice mode before, indicating that the research captures early adoption patterns rather than established usage habits.

Moreover, human-AI interactions could change even further as AI incorporates more human-like elements through images, videos, and physical embodiment.

The precise effects of chatbot usage on people’s psychological well-being remain unclear. These OpenAI-MIT studies do not prove that intense chatbot usage is causing emotional dependency; they primarily identify correlations. Further, ChatGPT is only one particular platform from one particular company; other services are more explicitly designed to be “AI companions.”

The authors argue that further research should study the effects of longer-term exposure to AI models.

Unfortunately, there might not be much time to conduct that research. AI systems are rapidly improving and spreading to new users. And with AI usage becoming increasingly relevant for career success, societal dependencies are forming that may be difficult to reverse.

OpenAI’s New Image Generator Overcomes Previous AI Art Limitations

In June 2022, the popular blogger Scott Alexander described how the AI art generators of the time, such as DALL-E 2, struggled to generate unconventional stained glass window designs.

“I’m not going to make the mistake of saying these problems are inherent to AI art,” wrote Alexander. “These are the sorts of problems I expect to go away with a few months of future research.”

One reader disagreed and challenged Alexander to a bet that these problems would persist for the foreseeable future. The bet named five concrete images that AI would be unable to generate for at least three years, such as “pixel art of a farmer in a cathedral holding a red basketball.”

Now, less than three years later, OpenAI’s latest image generator can clearly handle all five of these tasks. AI capabilities that appeared impossible in 2022 are now available to millions of people.

There’s one caveat to the system’s success. Though it can easily respond to the aforementioned prompt by generating pixel art of a farmer in a cathedral holding a basketball, it sometimes generates a standard orange basketball rather than a red one.

This represents a remaining limitation of current AI image generators: they struggle to break away from dominant patterns in their training data.

Nonetheless, OpenAI’s new generator is making notable progress in this respect. One particularly striking example is its ability to depict a completely full glass of wine—something previous AI models often failed to do, since wine glasses are rarely filled to the brim in photos.

The image generator, which is part of OpenAI’s GPT-4o model, has cleared other significant hurdles as well, such as displaying legible text in images, a task that previous AI systems struggled with immensely. Though it still makes small errors, 4o can produce everything from restaurant menus to comic strips.

Human hands and fingers presented another persistent challenge for AI art generators. Earlier models frequently produced telltale distortions—too many fingers, misshapen joints, or anatomically impossible arrangements. Again, the 4o model significantly reduces these issues.

While fixing these weaknesses, GPT-4o also enhances AI image generators’ existing strengths. For example, the longstanding ability to transform images into different artistic styles (“style transfer”) has improved markedly.

This advancement sparked a viral “Ghiblification” trend on X, where many users are converting photos and memes into the style of animated movies from Studio Ghibli, a renowned Japanese animation studio. Though Ghiblifiers are having lots of fun, their posts are also reigniting concerns about AI and copyright infringement.

For policymakers, one key takeaway is that AI’s current capabilities are just that: current. Future AI systems may have abilities that surprise us.

Gemini 2.5 Pro Pushes AI Capabilities Forward

Google has released an experimental version of Gemini 2.5 Pro, its latest broadly capable AI model.

As with previous Gemini models, 2.5 Pro can process up to 1 million tokens at once (approximately 750,000 words). That’s enough to comfortably ingest the entire Lord of the Rings trilogy and still have plenty of room to answer questions about the books.

This lengthy “context window” dwarfs those of competitors, which typically manage less than a quarter of Gemini’s capacity.

Further, Google wrote that a 2 million token context window is “coming soon.” That would be nearly enough to process all five existing Game of Thrones books in one prompt.
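The token figures above can be turned into rough word counts with simple arithmetic. This sketch assumes the ratio implied by the 1 million tokens to 750,000 words comparison (about 0.75 words per token); real ratios vary by tokenizer and by text, so treat the results as ballpark numbers.

```python
# Assumption: roughly 0.75 English words per token, as implied by
# "1 million tokens (approximately 750,000 words)". Actual ratios vary.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int) -> int:
    """Approximate how many words fit in a context window of the given size."""
    return round(tokens * WORDS_PER_TOKEN)


if __name__ == "__main__":
    current_window = 1_000_000         # Gemini 2.5 Pro today
    planned_window = 2_000_000         # the "coming soon" window
    quarter_window = current_window // 4  # "less than a quarter" ceiling for competitors

    for label, tokens in [
        ("Current window", current_window),
        ("Planned window", planned_window),
        ("A quarter of the current window", quarter_window),
    ]:
        print(f"{label}: {tokens:,} tokens is roughly {tokens_to_words(tokens):,} words")
```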

Another important feature is that Gemini 2.5 Pro incorporates reasoning capabilities, meaning it can “think” before responding. Its predecessors, 1.5 Pro and 2.0 Pro, lacked this ability.

Gemini 2.5 Pro scored 18.8% on Humanity’s Last Exam, a collection of 3,000 extremely challenging questions that stumped previous AI models. It also achieved 84% on Google-Proof Q&A (GPQA), a challenging science benchmark where top models scored below 40% in fall 2023.

Overall, there’s a case to be made that Gemini 2.5 Pro is the best all-around reasoning machine in the world.

But that claim is certainly debatable. OpenAI, Anthropic, and xAI also offer reasoning models with similar performance metrics, with DeepSeek R1 lagging slightly behind.

Importantly, this competitive landscape does not mean that AI progress is plateauing. Quite the opposite: with top GPQA scores rocketing from below 40% to 84% in under two years, the new reasoning systems are all breaking records simultaneously.

CAIP News

On Tuesday, CAIP hosted a panel discussion on AI and Cybersecurity: Offense, Defense, and Congressional Priorities. Stay tuned for a video recording and our forthcoming research report on cybersecurity.

The University of Louisville’s College of Business mentioned CAIP in a profile of Ben Shar, a student who presented at CAIP’s recent advanced AI exhibition. Notre Dame’s Lucy Family Institute for Data & Society and University of Wisconsin–Madison’s School of Computer, Data & Information Sciences also discussed their students’ presentations.

The American Action Forum, International Association of Privacy Professionals, Government Technology, and the New York Times mentioned CAIP’s recommendations for the White House AI Action Plan.

ICYMI: CAIP shared suggestions with the U.S. Office of Management and Budget (OMB) regarding the revision of OMB Memorandum M-24-18.

From the archives… As the 2025 Major League Baseball season begins, revisit Iván Torres’ blog post about AI-powered online sports betting, written shortly after the LA Dodgers’ World Series victory last fall.

Quote of the Week

AI reads us. Now it’s time for us to read AI.

—Jeanette Winterson, an award-winning British novelist, on humans reading AI-generated literature

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music recommendations to pass along, please drop me a note at jakub@aipolicy.us.

—Jakub