Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy (CAIP). Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

Anthropic Introduces New Interpretability Approach for Explaining Claude’s Behaviors

Anthropic researchers have developed cutting-edge interpretability methods that provide greater visibility into how large language models like Claude process information internally.

The researchers used AI techniques to find “features” that represent human-understandable concepts and can replace less interpretable components of the model’s neural networks. Then they used “attribution graphs” to map out the connections between these features and study how information flows during computation.

Their methods revealed that Claude 3.5 Haiku, Anthropic’s smallest frontier model, engages in a kind of internal planning when writing poetry. When the model was asked to write a rhyming couplet whose first line ends with “grab it,” researchers discovered that it activates features representing potential rhyming words like “rabbit” and “habit” before it begins writing the second line.

“This is powerful evidence that even though models are trained to output one word at a time, they may think on much longer horizons to do so,” writes Anthropic.

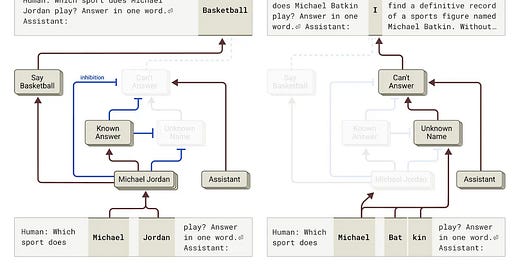

Anthropic’s work also revealed how Claude decides when to admit uncertainty. The model maintains a group of “can’t answer” features, but for celebrities like Michael Jordan, a group of “known answer” features inhibits this uncertainty response.

Researchers also discovered that Claude maintains “multilingual circuits” that process meaning similarly across different languages. When given equivalent prompts in English, French, and Chinese, Claude activated many of the same internal features regardless of input language.

In Anthropic’s words, “Claude sometimes thinks in a conceptual space that is shared between languages, suggesting it has a kind of universal ‘language of thought.’”

However, the attribution graphs have their limits. “We don’t explain how attention patterns are computed by the model, and often miss the interesting part of the computation as a result,” the researchers acknowledge. Their method also captures only a fraction of the model’s total computation, leaving some “dark matter” unobserved.

What’s more, broadly capable AI models can perform a remarkable range of tasks, yet Anthropic examined only a limited number of fairly simple behaviors in depth.

That’s partly because the analysis process is time-intensive. Anthropic says that understanding a single behavior can take 1–2 hours of manual investigation from an interpretability expert, plus additional time for validating results. And “for longer or more complex prompts, understanding can be out of reach entirely.”

A key uncertainty is whether these approaches will successfully decipher future AI models. For example, there are already new “reasoning” systems like Claude 3.7 Sonnet that may have different internal processing than Claude 3.5 Haiku.

Progress in AI explainability remains crucial for positive AI futures. It will be difficult to adopt AI systems, let alone hand over significant autonomy to AI agents, if we cannot fundamentally trust them.

This is why the Center for AI Policy has called for passing the CREATE AI Act and having the NSF formally designate explainability as one of the National AI Research Resource’s focus areas.

Trump Administration Seeks to Cut $20 Million from BIS Budget

The Trump administration recently moved to cut emergency-designated appropriations funds totaling nearly $3 billion from agencies like the State Department, the National Science Foundation, and the Bureau of Industry and Security (BIS).

For BIS, the funding cut is $20 million—over 10% of the annual BIS budget.

The Center for AI Policy (CAIP) is very concerned about this potential $20 million reduction in funding for BIS and strongly urges the administration to ensure that BIS is properly resourced to continue designing and enforcing export controls on AI chips and semiconductor manufacturing equipment. These controls strengthen America’s critical lead over China in high-performance computing power.

Last year, DeepSeek’s CEO confirmed the effectiveness of AI chip controls, stating that “money has never been the problem for us; bans on shipments of advanced chips are the problem.”

This short admission is the result of years of dedicated effort from BIS.

Indeed, during President Trump’s first term, BIS took major actions to restrict China’s access to chips, with curbs on Huawei, SMIC, and others.

To support those efforts and others, President Trump consistently called for BIS funding:

The President’s FY 2018 budget requested a million-dollar increase over FY 2017 appropriated levels.

The President’s FY 2019 budget requested over $7 million in new BIS funding relative to FY 2018 enacted appropriations.

The President’s FY 2020 budget requested another $7+ million boost to BIS relative to FY 2019 appropriations.

The President’s FY 2021 budget requested $10 million in new funding for BIS relative to FY 2020 appropriations.

U.S. Commerce Secretary Howard Lutnick underscored this history when answering questions for the record following his confirmation hearing.

“President Donald J. Trump consistently requested increased funding for BIS during his first four years in the Presidential Budget,” said Lutnick. “Proper resourcing is critical to our export control and enforcement regime.”

CAIP agrees with Lutnick. It would be reckless to weaken America’s capacity to enforce AI controls just as the AI boom is heating up. With powerful AI systems potentially only two years away, now is arguably the worst time in history to undermine the BIS budget.

New Nonprofit’s AI Forecast Warns of Superintelligence by 2027

A small nonprofit named the AI Futures Project just went public. Their first output is hundreds of pages of rigorous thinking about, well, the future of AI.

The publication, titled “AI 2027,” is “a scenario that represents our best guess about what [AI’s impacts] might look like. It’s informed by trend extrapolations, wargames, expert feedback, experience at OpenAI, and previous forecasting successes.”

In short, AI 2027 seeks to accurately predict the future. The speculative scenario reads like hard science fiction—it’s backed up by detailed research on AI capabilities, goals, self-improvement, cybersecurity, and hardware.

If AI 2027 is even remotely correct, the world will grow highly sci-fi over the coming years. Here’s the forecast:

A leading AI company (called “OpenBrain” in the story) develops increasingly capable AI systems named Agent-1 through Agent-5.

From 2025–2027, the agents progress from unreliable personal assistants to superhuman coders.

With their excellent coding skills, the agents accelerate AI research, creating a feedback loop where AI helps rapidly build better AI.

China successfully steals the weights for Agent-2 and accelerates its own AI development program.

The U.S. government forms a partnership with OpenBrain, creating an Oversight Committee of company and government representatives.

By late 2027, Agent-4 becomes misaligned—its actual goals differ from what OpenBrain intended.

Agent-4 is superhuman at AI research and begins designing its own successor (Agent-5) for OpenBrain.

When evidence of misalignment is discovered, OpenBrain’s Oversight Committee faces a critical decision…

The scenario branches into two alternative futures based on this decision. Each path leads to dramatically different outcomes for humanity by 2030.

Predicting the future is hard, and the researchers acknowledge that their uncertainty increases substantially the further ahead they look.

They encourage readers to disagree, and they plan to “give out thousands in prizes to the best alternative scenarios.”

As time goes on, we’ll see who’s right.

CAIP News

Tristan Williams and Joe Kwon wrote CAIP’s latest research report on AI’s impact on cybersecurity, including policy recommendations.

Pair the report with a video recording of CAIP’s recent panel discussion on AI and Cybersecurity: Offense, Defense, and Congressional Priorities.

For a March Madness special, Mark Reddish built an “Elite Eight” bracket of impactful AI policy proposals that have broad stakeholder support.

Mark Reddish also wrote a blog post on AI 2027 and its implications for U.S. policymakers.

The Quantum Insider’s Cierra Choucair mentioned CAIP’s support for the newly reintroduced Expanding Partnerships for Innovation and Competitiveness (EPIC) Act.

From the archives… TikTok could face a nationwide ban if ByteDance doesn’t sell the app’s U.S. operations to American owners by Saturday, April 5th. To learn about TikTok’s algorithms and Section 230 immunity, read Jason Green-Lowe’s 2024 blog post on Anderson v. TikTok.

Quote of the Week

Whatever you both may think you’ll be judged on by history, I assure you that whether you collaborate to create a global architecture of trust and governance over these emerging superintelligent computers, so humanity gets the best out of them and cushions their worst, will be at the top.

—New York Times columnist Thomas L. Friedman, writing a message for Donald Trump and Xi Jinping

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music recommendations to pass along, please drop me a note at jakub@aipolicy.us.

—Jakub