Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy (CAIP). Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

We’re seeking feedback on AI Policy Weekly! To help shape the future of this newsletter and make it more valuable for you, please consider taking a minute to share your perspective here.

White House Issues New Memoranda on Government AI Adoption

The White House Office of Management and Budget (OMB) recently issued two memoranda providing guidance and rules on how executive branch agencies should use and procure AI systems.

President Trump’s January executive order on AI directed the creation of these documents, which replace policies issued during the Biden administration.

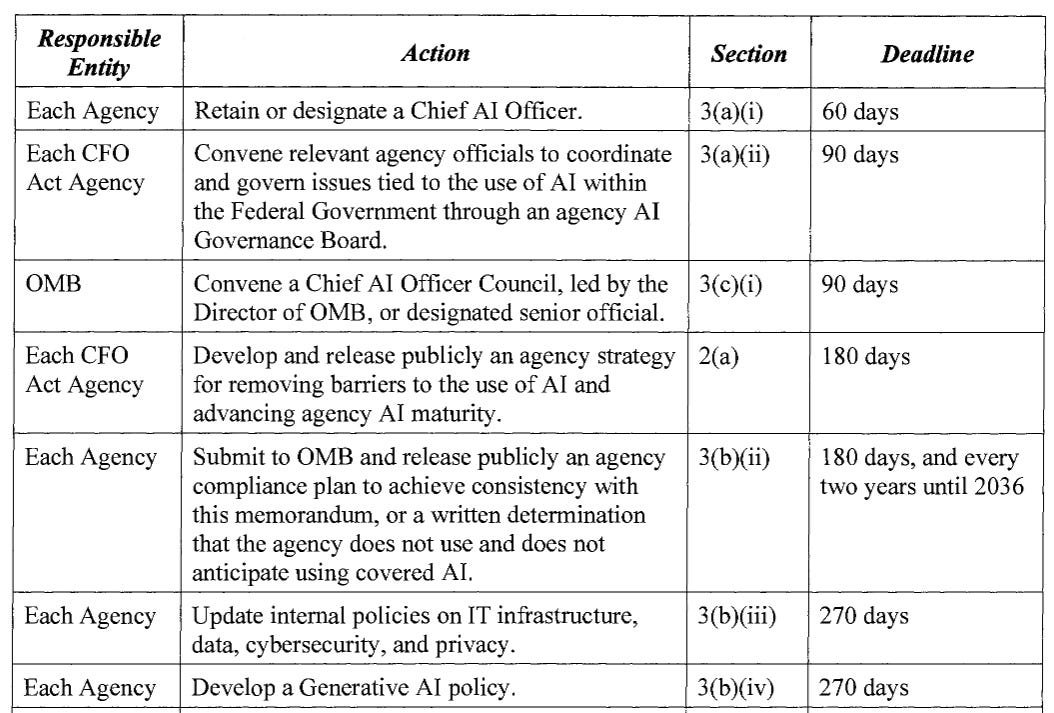

M-25-21, “Accelerating Federal Use of AI,” requires all agencies to designate or retain a Chief AI Officer (CAIO). These officials will “promote agency-wide responsible AI innovation,” advise agency leadership, and more.

The memorandum also directs each CFO Act agency (the 24 major agencies covered by the Chief Financial Officers Act of 1990) to establish an AI Governance Board within 90 days to “coordinate and govern” the agency’s AI activities.

Further, CFO Act agencies must develop “AI Strategies” within 180 days. These are formal documents that outline an agency’s plan for AI adoption, including technical infrastructure needs, data governance approaches, and workforce development plans. These strategies must be published on agency websites.

M-25-21 also identifies fifteen AI use cases presumed to be “high-impact,” such as “transport, safety, design, development, or use of hazardous chemicals or biological agents.”

For AI applications in these categories, agencies must implement risk management practices within 365 days. Specific mitigations include pre-deployment testing, impact assessments, ongoing performance monitoring, and human oversight. Agencies also need to “provide an option for end users and the public to submit feedback on the use case.”

Regarding transparency, M-25-21 mandates that agencies “release and maintain AI code as open source software in a public repository,” with some exceptions for security and intellectual property concerns.

M-25-22, the procurement memorandum, is about half as long as M-25-21. It provides specific requirements for how federal agencies can purchase AI systems and services.

When evaluating AI products under M-25-22, agencies “must, to the greatest extent practicable, test proposed solutions to understand the capabilities and limitations.”

M-25-22 also establishes specific contract requirements for AI procurement. Contracts must provide agencies the ability to “regularly monitor and evaluate [...] performance, risks, and effectiveness” of AI systems.

Additionally, within 200 days, the General Services Administration (GSA) will create a web-based repository for agencies to share AI procurement resources.

Both M-25-21 and M-25-22 explicitly exclude AI components of National Security Systems—information systems involving intelligence, cryptologic, military, or related activities.

These memoranda establish the framework for how federal agencies will implement, govern, and purchase AI technologies. This is highly consequential as AI systems become increasingly central to government operations.

Google DeepMind Outlines Technical Approach to AGI Safety

Google DeepMind recently published a 145-page paper outlining its approach to mitigating severe harms from artificial general intelligence (AGI).

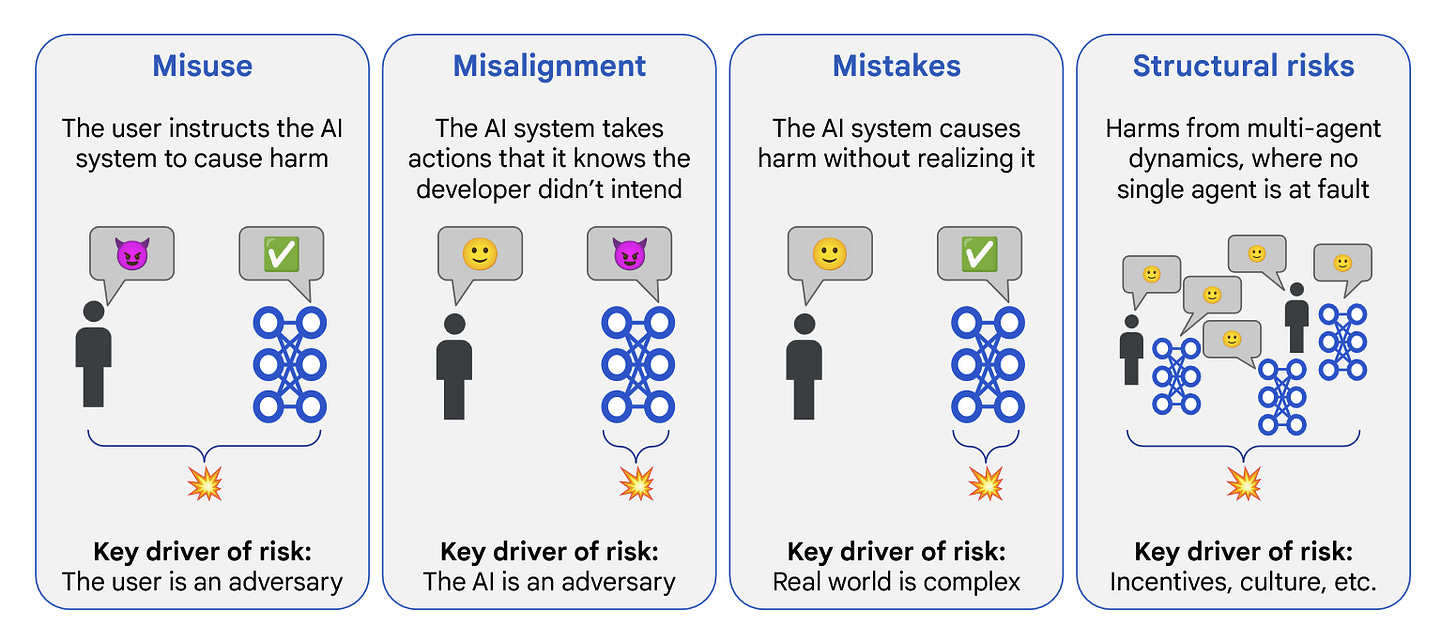

The paper, titled “An Approach to Technical AGI Safety and Security,” presents technical strategies for addressing risks from both misuse and misalignment of increasingly powerful AI systems.

DeepMind defines AGI as a (future) AI system whose capability “matches or exceeds that of the 99th percentile of skilled adults on a wide range of non-physical tasks.”

On timelines, the authors state: “We are highly uncertain about the timelines until powerful AI systems are developed, but crucially, we find it plausible that they will be developed by 2030.”

Their strategy is built on several key assumptions, such as the “no human ceiling” assumption: “AI capabilities will not cease to advance once they achieve parity with the most capable humans.”

DeepMind also believes that future AI systems’ contributions to AI research might “drastically increase the pace of progress, giving us very little calendar time in which to notice and react to issues that come up.”

To reduce misuse risks, DeepMind proposes a strategy involving capability evaluations, cybersecurity measures, and deployment mitigations that prevent bad actors from accessing harmful capabilities.

For misalignment, where AI systems might knowingly cause harm against developers’ intentions, the authors advocate for improving oversight, using interpretability techniques, making safety cases, and more.

Though the paper is long and detailed, the authors emphasize that “this is a roadmap rather than a solution, as there remain many open research problems to address.”

Meta’s Llama 4 AI Models Arrive to Mixed Reviews

Meta released its new generation of AI models, Llama 4, on Saturday, publishing the model weights for two models named Scout and Maverick. A third model, Behemoth, remains in training, and a new reasoning model is also in the works.

Both Scout and Maverick employ a “mixture-of-experts” design, which means they activate small subsets of their weights selectively and dynamically in response to inputs. Scout, the smaller of the two, has 109 billion total parameters, whereas Maverick has 400 billion.
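To make the mixture-of-experts idea concrete, here is a toy sketch of top-k routing, one common way such models decide which experts to run for a given input. It is not Meta’s implementation: the function name `top_k_moe`, the linear router, and the tiny hand-written experts are hypothetical illustrations, whereas real models apply learned routing to each token inside every mixture-of-experts layer.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def top_k_moe(
    x: Vector,
    router_weights: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    k: int = 1,
) -> Tuple[Vector, List[int]]:
    """Hypothetical toy router: send x to the k highest-scoring experts and mix their outputs."""
    # Router: one score per expert, computed from the input itself.
    scores = [dot(w, x) for w in router_weights]
    # Keep only the indices of the k largest scores; only these experts will run.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the chosen experts' scores gives mixing weights that sum to 1.
    m = max(scores[i] for i in chosen)
    exps = [math.exp(scores[i] - m) for i in chosen]
    total = sum(exps)
    gates = [e / total for e in exps]
    # Weighted sum of the selected experts' outputs; unselected experts are never called.
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    # Three made-up experts and a made-up router for 2-dimensional inputs.
    experts = [
        lambda v: [2 * a for a in v],
        lambda v: [a + 1 for a in v],
        lambda v: [-a for a in v],
    ]
    router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    print(top_k_moe([3.0, 1.0], router_weights, experts, k=1))  # prints ([6.0, 2.0], [0])
```

With `k=1`, only the top-scoring expert does any work for this input; the other experts’ weights sit idle. That selective activation is why a mixture-of-experts model’s total parameter count can be much larger than the number of parameters it actually uses on any single input.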

Meta advertises an impressive 10-million-token context window for Scout (over 7 million words), but it trained the model directly only on significantly shorter contexts, leaving some analysts skeptical about real-world utility.
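The words figure follows from a rough conversion, assuming the common rule of thumb of about 0.75 English words per token (the actual ratio varies with the tokenizer and the text):

$$
10{,}000{,}000 \ \text{tokens} \times 0.75 \ \tfrac{\text{words}}{\text{token}} \approx 7{,}500{,}000 \ \text{words}
$$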

Meta’s official announcement showcased impressive benchmark scores, presenting Maverick as competitive against top non-reasoning models like GPT-4o and DeepSeek-V3.

However, independent evaluations have cast doubt on Llama 4’s abilities. Tests such as ARC-AGI, Fiction.LiveBench, SimpleBench, and Aider Polyglot showed other leading models outperforming Maverick and Scout.

One particularly pointed allegation claimed that Meta trained Llama 4 directly on certain test answers, artificially inflating results. Meta VP Ahmad Al-Dahle responded directly: “That’s simply not true and we would never do that.”

Prominent AI commentator Zvi Mowshowitz summarized the situation, stating that overall, Llama 4 inspired “the most negative reaction I have seen to a model release.”

Many will now be closely watching Meta’s forthcoming Behemoth and reasoning model to see how the company’s AI capabilities evolve.

CAIP News

The latest episode of the CAIP Podcast features Gabe Alfour, CTO and co-founder of Conjecture. Gabe and Jakub discuss superintelligence, AI-accelerated science, the limits of technology, different perspectives on the future of AI, loss of control risks, AI racing, international treaties, and more.

ICYMI: Mark Reddish built an “Elite Eight” bracket of impactful AI policy proposals that have broad stakeholder support.

ICYMI: Tristan Williams and Joe Kwon wrote CAIP’s latest research report on AI’s impact on cybersecurity, including policy recommendations.

From the archives… The famous Coachella music festival starts tomorrow. For analysis of AI’s intersection with music and copyright, revisit Tristan Williams’ December op-ed in Tech Policy Press: “Beyond Fair Use: Better Paths Forward for Artists in the AI Era.”

Quote of the Week

Not for most things.

—Microsoft co-founder Bill Gates’ response to the question “Will we still need humans?”

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or wish to share music recommendations, please drop me a note at jakub@aipolicy.us.

—Jakub