Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy (CAIP). Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

Congress Passes the Take It Down Act

On April 28th, the U.S. House passed the Tools to Address Known Exploitation by Immobilizing Technological Deepfakes on Websites and Networks (TAKE IT DOWN) Act by a 409–2 vote.

Because the Senate already passed the bill by unanimous consent in February, the next step is for President Trump to sign it into law.

He’s almost certain to do so, since he spoke in favor of the bill during his joint address to Congress in March, stating “I look forward to signing that bill into law.” Furthermore, First Lady Melania Trump has been a strong supporter of the bill.

Senator Ted Cruz (R-TX) originally introduced the Take It Down Act in June 2024 during the previous session of Congress, then reintroduced it in January 2025 for the current session. Senator Amy Klobuchar (D-MN), Representative Maria Elvira Salazar (R-FL), and Representative Madeleine Dean (D-PA) have also championed the legislation.

The Take It Down Act seeks to curb the publication of nonconsensual intimate imagery (NCII) online, including AI-generated NCII.

It introduces the term “digital forgery,” defined as an “intimate visual depiction of an identifiable individual created through the use of software, machine learning, artificial intelligence, or any other computer-generated or technological means [...] that, when viewed as a whole by a reasonable person, is indistinguishable from an authentic visual depiction of the individual.”

“Intimate visual depiction” is an established legal term for sexually explicit imagery, drawn from the 2022 reauthorization of the Violence Against Women Act (VAWA).

According to the Justice Department, existing law already “lets you bring a civil action in federal court against someone who shared intimate images, explicit pictures, recorded videos, or other depictions of you without your consent.”

The Take It Down Act goes further by creating criminal liability for people who knowingly publish digital forgeries or real intimate imagery to an online platform—with some exceptions.

Criminal penalties also apply to people who threaten to publish such images “for the purpose of intimidation, coercion, extortion, or to create mental distress.”

Furthermore, the Take It Down Act directs every covered platform to create a process whereby victims of NCII on the platform can ask the platform to remove that content (i.e., take it down). Platforms must comply with valid takedown requests within 48 hours, and they must “make reasonable efforts to identify and remove any known identical copies of such depiction.”

The Take It Down Act represents a significant step by Congress toward addressing the harms of deepfakes.

AI Experts From Biden’s Talent Surge Leave Federal Government

Six days before the 2024 election, the Biden administration announced that it had hired over 250 AI practitioners through its “AI Talent Surge”—a program launched in late 2023 through Biden’s AI executive order.

These technically savvy federal employees worked on tasks like “informing efforts to use AI for permitting, advising on AI investments across the federal government, and writing policy for the use of AI in government.”

According to recent reporting from Time, most of these experts are gone. It’s not entirely clear why, but Time heard from “multiple federal officials” that the employees were “quickly pushed out by the new administration.”

Time writes that many of the cuts came when Elon Musk’s Department of Government Efficiency (DOGE) “fired hundreds of recent technology hires as part of its broader termination of thousands of employees on probation or so-called ‘term’ hires.” Others occurred through workforce reductions at the U.S. Digital Service and the 18F technology office.

Angelica Quirarte, who helped recruit approximately 250 AI specialists for Biden’s AI Talent Surge, told Time that “about 10%” of those experts remain in federal service. Quirarte resigned 23 days into the Trump administration.

Thus, it seems that over 200 AI specialists have recently left the government.

The Center for AI Policy thinks government AI expertise is essential. It enables both government modernization and informed preparation for future AI systems that could fundamentally transform national security, global stability, and everyday life.

We strongly urge the Trump administration to prioritize rebuilding its AI capacity and expanding it beyond previous levels. We were pleased to see OMB Memorandum M-25-21’s guidance that “agencies are strongly encouraged to prioritize recruiting, developing, and retaining technical talent in AI roles,” and we urge those efforts to continue at full strength.

White House Publishes 10,000+ Comments on Upcoming AI Action Plan

AI Policy Weekly #67 compiled many of the publicly available responses to the Trump administration’s request for information (RFI) on its forthcoming AI action plan. However, many submissions remained private.

On April 24th, the White House published over 10,000 RFI responses, all of which are publicly available.

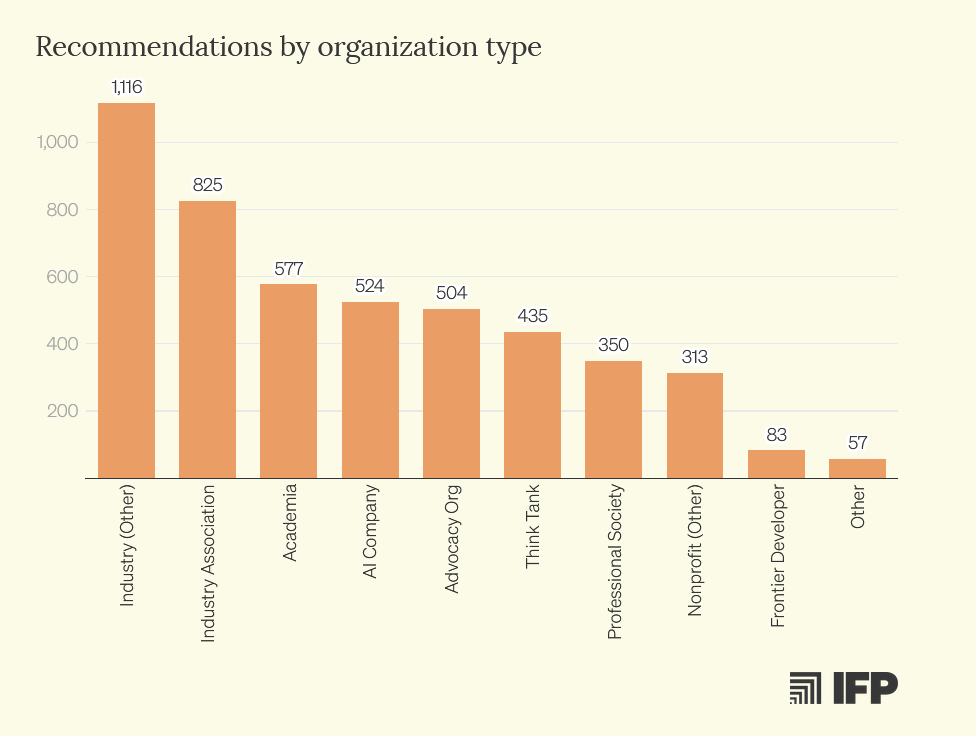

The Institute for Progress (IFP) used a large language model, Anthropic’s Claude, to analyze all these responses.

IFP found that nearly 93% of the submissions came from individuals, who often focused on copyright protections (or lack thereof) and AI’s impact on artists. Around 40% of the individual responses were anonymous or “used an obvious pseudonym,” and over 90% were “extremely short, non-substantive, and had negative sentiment.”

Of the 10,068 total submissions, IFP found a little over 700 that came from organizations and offered substantive policy recommendations. Those specific recommendations are all available in IFP’s “AI Action Plan Database.”

Here are some example submissions and recommendations:

Accelerate Science Now: “OSTP should coordinate a pilot program for leveraging expert prediction markets to inform AI R&D activities.”

AE Studio: “Create a 25% tax credit for companies investing in AI alignment and security R&D, with requirements to exceed previous year’s spending and publish findings for industry benefit.”

Amazon: “Support the National Artificial Intelligence Research Resource (NAIRR).”

American Security Fund: “Protect U.S. AI companies from shocks, especially by preserving Taiwan’s security.”

CivAI: “Schedule recurring demonstrations of cutting-edge AI systems for senior officials to build intuitive understanding of capabilities and limitations.”

ControlAI: Establish a regulator to “ensure that companies developing AI models above certain compute thresholds and general intelligence benchmarks comply with rigorous safety protocols.”

Convergence Analysis: “Initiate high-level dialogue with competitors, particularly China, focused specifically on superintelligent AI development.”

Harvard University Human Flourishing Program: “Define clear, measurable indicators across all six dimensions of flourishing that can be systematically tracked and evaluated.”

Heritage Foundation: “Explore cutting-edge technical methods for enhancing model explainability without sacrificing accuracy or performance.”

Institute for Family Studies: “Direct Workforce Development Boards (WDBs) to incorporate data on AI-related displacement risks into their local plans.”

Meta: “Government should have the expertise and resources to collaborate with industry on national security risks that enables model developers to anticipate and test for pressing security risks.”

METR: “Consider making high-frequency national measurement of R&D automation effects a top priority within the U.S. Federal statistical system.”

Microsoft: Implement “clear standards and guidelines for physical and cybersecurity measures for data centers and AI infrastructure.”

PepsiCo: “It is critical that agencies like NIST have the adequate support and funding that they need to carry out Congressional and Administration mandates.”

SemiAnalysis: “Work with BIS to ensure that subsystems of critical machinery are [export] controlled, not just the assembled machine.”

The Future Society: Run “a five-year research program focused on practical applications of fully homomorphic encryption for government and critical infrastructure.”

There are more responses from the Johns Hopkins Center for Health Security, Oxford Martin AI Governance Initiative, Cooperative AI Foundation, Utah Department of Commerce, Allen Institute for AI, Special Competitive Studies Project, APLU, AMA, NEA, NRF, IEEE, Solar Energy Industry Association, Motion Picture Association, National Association of Manufacturers, AI Policy Network, Databricks, Salesforce, Uber, Intel, Oklo, Thorn, Arm, Cohere, Mistral AI, RAND, Boeing, Adobe, Black Forest Labs, National Taxpayers Union, Center for Industrial Strategy, Lockheed Martin, Public Knowledge, Americans for Prosperity, S&P Global, GETTING-Plurality, Palo Alto Networks, SecureBio, GitHub, HiddenLayer, J.P. Morgan, AI Futures Project, Booz Allen, Snowflake, Transluce, Imbue, Fathom, SEMI, a coalition including RIAA and AFL-CIO, and many others.

CAIP News

We released new model legislation, the Responsible AI Act of 2025, designed to establish a regulatory framework for the most powerful artificial intelligence systems while ensuring continued innovation in the field.

From the archives… Last Congress, Senators Mitt Romney (R-UT), Jack Reed (D-RI), Jerry Moran (R-KS), Angus King (I-ME), and Maggie Hassan (D-NH) introduced the Preserving American Dominance in AI Act, a bill with several components resembling CAIP’s model legislation. Read about it in AI Policy Weekly #55.

Quote of the Week

Interpretability gets less attention than the constant deluge of model releases, but it is arguably more important.

—Dario Amodei, CEO of Anthropic, in an essay titled “The Urgency of Interpretability”

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or wish to share music recommendations, please drop me a note at jakub@aipolicy.us.

—Jakub