AI Policy Weekly #76

UAE, Google IO, whistleblower bill

Welcome to AI Policy Weekly, a newsletter from the Center for AI Policy (CAIP). Each issue explores three important developments in AI, curated specifically for U.S. AI policy professionals.

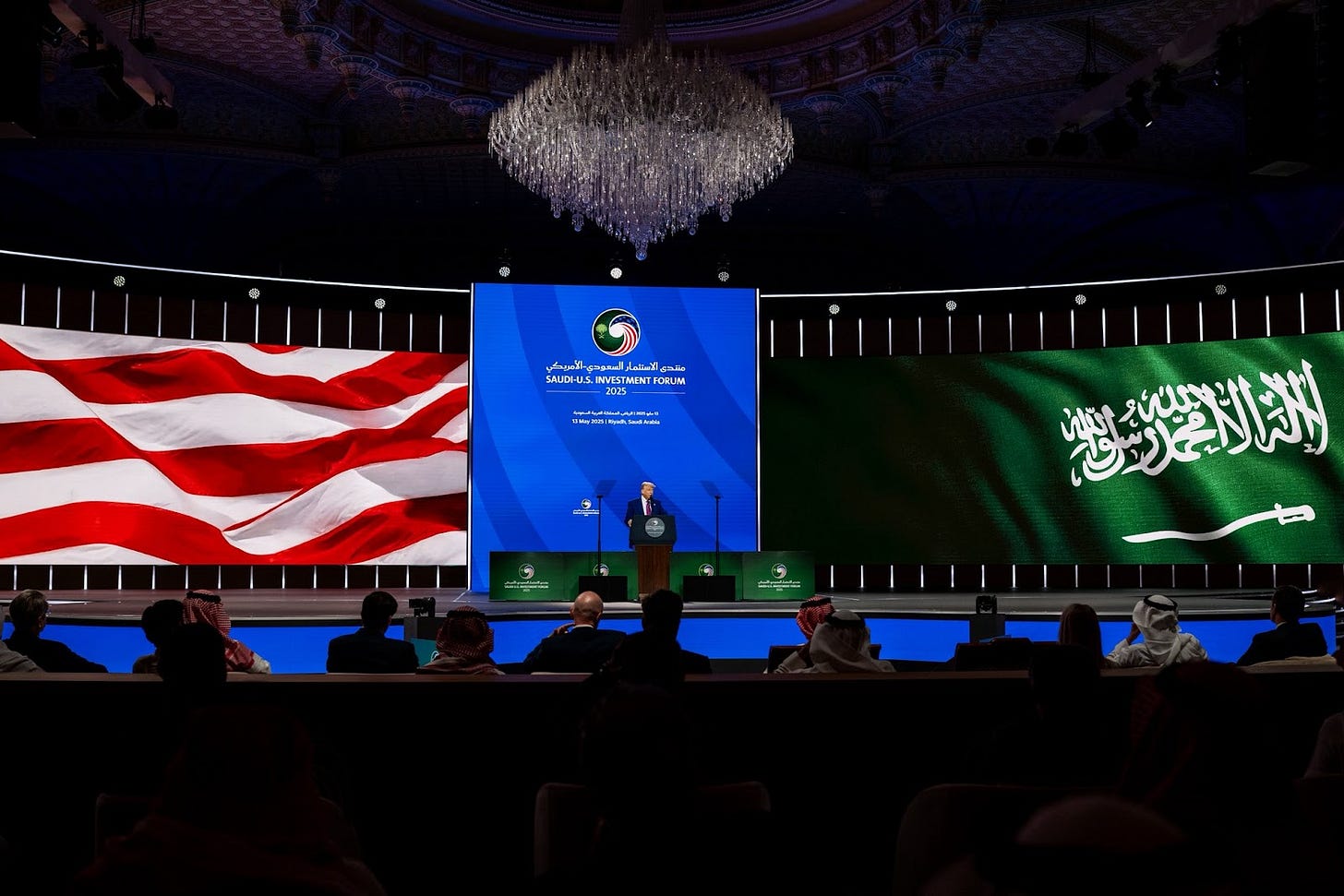

Trump Administration Makes Major AI Infrastructure Deals With Gulf States

In mid-May, President Trump made the second international trip of his second presidential term, visiting Saudi Arabia, Qatar, and the UAE.

The four-day journey included two major AI infrastructure announcements that will send hundreds of thousands of advanced American chips to the Gulf.

First, the Trump administration announced plans to build a 5-gigawatt AI campus in Abu Dhabi, enough to power millions of NVIDIA’s best AI chips. The first phase of the plan is already underway with construction of a 1-gigawatt data center.

According to chip expert Lennart Heim, this announcement is “bigger than all other major AI infrastructure announcements we’ve seen so far.”

Relatedly, the U.S. Commerce Department announced a new “U.S.-UAE AI Acceleration Partnership,” which will “bolster cooperation around critical technologies and ensure the protection of such technologies based on a set of joint commitments.”

Reuters reports that the Trump administration might let the UAE import 500,000 of NVIDIA’s most advanced AI chips per year starting in 2025.

This represents a shift from the Biden administration, which was reluctant to support UAE-based AI projects due to China’s influence in the region. The Diffusion Framework—which the Trump administration recently repealed—would’ve required AI companies to meet high security standards before deploying large quantities of chips in the UAE.

Although the Diffusion Framework is gone, a replacement rule is coming soon. Further, the U.S.-UAE deal includes “strong security guarantees to prevent diversion of U.S. technology,” according to Commerce Secretary Howard Lutnick.

A White House announcement says the UAE also committed to “invest in, build, or finance U.S. data centers that are at least as large and as powerful as those in the UAE.”

Days after Trump’s trip, OpenAI announced a new “Stargate UAE” project, including plans to build a 1-gigawatt computing cluster in Abu Dhabi. This cluster seems to be the same data center that the Commerce Department announced.

To build this new computing infrastructure, OpenAI will work with Oracle, NVIDIA, Cisco, SoftBank, and the Emirati company G42.

Before the UAE, President Trump visited Saudi Arabia. One day before his arrival, the country’s sovereign wealth fund launched a new AI operation and investment company called Humain.

The next day, NVIDIA, AMD, Qualcomm, Amazon, Groq, Cisco, and Google all announced partnerships with Humain. For example, Humain plans to acquire “several hundred thousand of Nvidia’s most advanced GPUs” over the next five years.

Additionally, the U.S. company Supermicro announced a $20 billion partnership with the Saudi company DataVolt to “build hyperscale AI campuses initially in the Kingdom of Saudi Arabia.”

Unlike the UAE, Saudi Arabia did not form a broader AI partnership with the U.S. government. However, the New York Times reports that a major deal is in the works.

Chips have joined oil as the currency of Middle East relations.

Google Shares Wide Range of AI Advancements at I/O 2025

Google made many AI announcements at its annual developer conference this week. Here’s a brief overview of some of the most noteworthy advancements. Note that some tools are available to everyone today, others are available to a limited audience, and others are coming later this year.

First, there were several updates focused on AI systems that generate text:

Deep Think is an enhanced reasoning mode that makes Gemini 2.5 Pro better at math and coding.

Gemini 2.5 Flash, Google’s fastest and cheapest model, received a general performance boost.

Gemini Diffusion generates outputs significantly faster using diffusion techniques.

Gemma 3n is an open model optimized for mobile devices like smartphones and tablets.

Gmail will be able to personalize AI-generated draft replies using context from past emails and Google Drive files.

Jules, an autonomous coding agent, is now available to everyone.

Video, image, and audio generation systems also advanced:

Veo 3 can convert text prompts into videos with corresponding audio, such as musicals, commercials, standup, dialogue, and ASMR.

Imagen 4 is Google’s best text-to-image model yet.

Vids, Google’s video editing application, can generate videos of AI avatars reading custom scripts.

Video Overviews are coming to NotebookLM, adding to the existing ability to generate podcast overviews.

3D video calls are possible thanks to Google’s “state-of-the-art AI volumetric video model.”

Text-to-speech capabilities are in preview for Gemini 2.5 Pro and Flash.

Google Meet can now do near real-time speech translation between English and Spanish.

Other announcements include:

AI Mode is a chatbot-centered form of Google Search, similar to Perplexity AI.

SynthID Detector allows users to check whether content was generated by Google’s AI models.

SignGemma can analyze video of American Sign Language and translate it into English text.

Lyria 2, a text-to-music model, is now available through YouTube Shorts.

Several days before I/O, Google introduced AlphaEvolve, an AI system that semi-autonomously writes and adapts computer code to solve challenging science and engineering problems. Google has already used AlphaEvolve to operate data centers more efficiently and speed up Gemini training time, a clear case of AI advancements contributing to further AI progress.

Overall, Google’s announcements show that AI progress is not slowing down.

Senator Grassley Introduces Bipartisan Bill to Protect AI Whistleblowers

Senate Judiciary Chair Chuck Grassley (R-IA) recently introduced the AI Whistleblower Protection Act, a bipartisan bill designed to protect workers involved in AI systems from retaliation when they report security vulnerabilities, legal violations, or unaddressed AI dangers.

Additional Senate co-sponsors include Senators Chris Coons (D-DE), Marsha Blackburn (R-TN), Amy Klobuchar (D-MN), Josh Hawley (R-MO), and Brian Schatz (D-HI). In the House, Representatives Jay Obernolte (R-CA) and Ted Lieu (D-CA) are introducing companion legislation.

The bill specifically protects whistleblowers who report an “AI security vulnerability,” defined as “any failure or lapse in security” that could enable theft of state-of-the-art AI technology. It also covers whistleblowers reporting an “AI violation,” which includes (1) violations of federal law involving AI systems, and (2) “any failure to appropriately respond to a substantial and specific danger that the development, deployment, or use of artificial intelligence may pose to public safety, public health, or national security.”

The bill prohibits employers from firing, demoting, or otherwise retaliating against individuals who lawfully report AI security vulnerabilities or violations to regulators, the Attorney General, Congress, or appropriate company officials. These protections extend to both employees and independent contractors, including former workers.

Whistleblowers who experience retaliation can file a complaint with the Department of Labor. If the Labor Department fails to issue a final decision within 180 days—provided the delay is not due to the whistleblower’s actions—the individual could pursue their case through a jury trial in federal court.

Whistleblowers who succeed in court would be entitled to reinstatement, twice the amount of lost wages, compensatory damages (including attorney’s fees), and any other relief deemed appropriate by the court.

Importantly, employers cannot force whistleblowers to give up these protections.

The Center for AI Policy firmly endorsed the legislation in Senator Grassley’s press release, describing it as a “strong and effective” measure that Congress should advance “without delay.”

CAIP News

On Tuesday, May 20th, the Center for AI Policy held a panel discussion in the Rayburn House Office Building on the Progress and Policy Implications of Advanced Agentic AI. A video recording is available here.

Joe Kwon wrote a detailed research report titled “AI Agents: Governing Autonomy in the Digital Age.”

ICYMI: Joe Kwon published an article in Tech Policy Press titled “Democracy in the Dark: Why AI Transparency Matters.”

From the archives… Last September, Jason Green-Lowe traveled to New York City to hear perspectives on AI’s risks and rewards from relevant private sector employees. He wrote a blog post with lessons from the trip: “Reflections on AI in the Big Apple.”

Quote of the Week

We could wake up very soon in a world where there is no cybersecurity. Where the idea of your bank account being safe and secure is just a relic of the past. Where there’s weird shit happening in space mediated through AI that makes our communications infrastructure either actively hostile or at least largely inept and inert. So, yeah, I’m worried about this stuff.

—Vice President JD Vance, discussing the potential downsides of AI in a podcast interview

This edition was authored by Jakub Kraus.

If you have feedback to share, a story to suggest, or music recommendations to pass along, please drop me a note at jakub@aipolicy.us.

—Jakub
